Does Your Brain Represent the Outside World?

The Rise (and Trouble) of Representationalism in Neuroscience

Take a look at the sentence you’re reading right now. It’s just a string of shapes on a screen.

But somehow, those shapes turn into words. And those words turn into ideas — ideas about something.

How does that happen?

Cognitive scientists have a go-to answer: your brain represents the things those words and ideas are about.[1] Somewhere in your head, there’s a pattern of neural activity that stands in for dog. A different one activates for tree. Another for jealousy. In other words, certain brain activity has content: it is about something.[2]

If you read through academic papers, you’ll find sentences like, “neurons in the human medial temporal lobe represent concepts,” or “neurons in the mammalian visual cortex represent edges in the visual field.”

This way of thinking — that the brain builds internal representations — is one of the most powerful and persistent ideas in cognitive science. It’s been used to explain everything from memory to language to decision-making. It’s the core idea behind much of cognitive neuroscience.

But there’s something strange here: for a concept so widely used, it’s surprisingly hard to pin down. What exactly is a representation?

Some philosophers (and, increasingly, some scientists) are starting to push back. They argue that we’ve gotten a little too comfortable with this idea.[3] That, despite all the talk, representations might not actually explain how brains work at all.

So this week, let’s take a closer look. Is representational thinking a deep insight into how the brain works? Or is it a metaphor we’ve mistaken for a mechanism?

To find out, we’ll ask three questions:

What does it mean to say the brain represents something?

Why has this idea become so dominant in psychology and neuroscience?

What do the critics say?

A quick note before we get started:

This is Essay 4 in The Trouble with Meaning series. You don’t need to read the earlier ones to follow along, but if you’re curious:

Essay 1 looked at Searle’s Chinese Room.

Essay 2 explored symbolic communication (with a little help from aliens).

Essay 3 tackled the grounding problem — how words get their meaning.

Last week, I introduced a simple framework for thinking about representations: they typically have three parts — a vehicle, a target, and a consumer.

The vehicle is what carries the message — maybe the letters d-o-g, a pattern of brain activity, or a string of numbers inside a language model.

The target is what it’s about — a real dog, an idea of a dog, or a fictional one.

And the consumer is whatever system uses that connection to do something useful: answer a question, guide behaviour, make a prediction.
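To make the three parts concrete, here is a toy Python sketch. The class name Representation, its fields, and the answer_question consumer are hypothetical labels invented for this illustration; nothing here claims that brains store anything like a Python object.

```python
from dataclasses import dataclass
from typing import Callable


# Hypothetical toy model of the vehicle / target / consumer framework.
# The names below are illustrative labels, not a standard API.
@dataclass
class Representation:
    vehicle: str                    # what carries the message (here, the letters d-o-g)
    target: str                     # what the vehicle is about
    consumer: Callable[[str], str]  # whatever uses the vehicle to do something useful


def answer_question(vehicle: str) -> str:
    """A stand-in consumer: uses the vehicle to answer 'What is in the garden?'"""
    return f"There is a {vehicle} in the garden."


word = Representation(
    vehicle="dog",
    target="a real dog in the garden",
    consumer=answer_question,
)

# The consumer only ever touches the vehicle, never the target itself.
print(word.consumer(word.vehicle))  # There is a dog in the garden.
```

Notice that the target field is simply filled in by whoever writes the code: nothing about the string "dog" fixes what it is about. That gap is the grounding problem in miniature.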

This week, I’m focusing on one kind of vehicle in particular: brain activity.

When neuroscientists say the brain represents something — what exactly are they claiming? And how solid is that claim?

Q1. What Does it Mean to Say the Brain Represents Something?

When scientists say your brain represents something — let’s say a dog — they don’t mean your brain is literally holding a tiny photograph of a dog. There’s no little projector in your head beaming a mental slideshow.

What they mean is that some pattern in your brain, a specific configuration of neural activity, is standing in for that dog.[4] It’s a signal that points to something. It’s about something. It carries content.

Those words — about and content — are doing a lot of work here. They hint at something big: that your brain activity doesn’t just happen, it means something. It refers beyond itself — to things you see, remember, or imagine.

Take vision, for example. When you look at a dog, light hits your retinas and triggers a cascade of neural signals. That information moves through the brain, from simple edge detection in the primary visual cortex to higher-level regions that process features like the dog’s texture, shape, colour, movement, and location in space.

So, in this view, perception is a process of constructing an internal model of the world — a model that allows us to recognise objects, predict outcomes, and make decisions.

And representations aren’t limited to perception. They show up in theories of memory (where we store representations of the past), language (where words represent concepts), and reasoning (where we manipulate abstract representations to draw conclusions). In each case, thinking is often framed as a kind of internal workspace where symbols, patterns, or codes do the heavy lifting.[5]

That’s the basic picture: your brain builds internal versions of the world — and then uses those versions to perceive, remember, think, feel, and act.

But as we’ll see, this way of thinking carries some philosophical baggage. Before we unpack that, though, let’s look at why representational thinking is so popular in the cognitive sciences.

Q2. Why is Representational Thinking so Dominant in the Cognitive Sciences?

It might seem like scientists have always thought of the brain as building internal models of the world. But that hasn’t always been the case.

For much of the early 20th century, psychology took a very different approach: behaviourism.[6]

Behaviourists didn’t want to talk about minds at all. If you couldn’t see it or measure it, it didn’t count. Psychology, they argued, should stick to what could be observed: stimulus and response. Internal thoughts, feelings — and yes, representations — were off-limits.

But by the 1950s and ’60s, cracks were starting to show in behaviourism.[7]

There were just too many things it couldn’t explain. People could understand sentences they’d never heard before. They could imagine new futures, solve problems they hadn’t been trained on, and plan ahead. Clearly, something was going on inside the head — and behaviourism didn’t have the tools to deal with it.

So the field shifted.

Behaviourism was replaced by cognitivism.

Inspired by the rise of computers, psychologists began to think of the brain as an information processor: something that takes in data, stores it, transforms it, and uses it to generate behaviour. Just as a computer relies on data structures like files and variables, the brain, on this view, needs its own internal stand-ins for information about the world. That’s where representations come in.

Representationalism is, therefore, a central pillar of the Computational Theory of Mind.

Representational thinking offered a way to study the mind within a scientific framework. It allowed researchers to build models of memory, perception, and reasoning — using math and formal logic. Suddenly, fuzzy concepts had structure. Theories could be tested. The brain-as-representation-machine became a powerful way to make sense of behaviour.

Why? Because the idea seemed to fit the evidence.

So let’s take a quick tour of some of that evidence.

Rats in Mazes

One classic example comes from a 1996 study by Packard and McGaugh.[8] The setup was a cross-shaped maze with four arms: north, south, east, and west. During training, rats were always placed in the south arm, the food was placed in the west arm, and the north arm was blocked with a clear Plexiglas barrier.

If a rat turned left at the intersection, it found the food. If it turned right, it didn’t. Over seven days, the rats learned how to find food — and they got very good at it.

Then on the eighth day, researchers flipped things: they placed the rats in the north arm and blocked the south arm.

This was the critical test. If behaviourism is correct, the rats would have learned a stimulus-response rule, perhaps something like “turn left to get food.” In that case, they should keep turning left, which would now take them to the east arm.

But if the rats had formed a spatial map — an internal representation of the maze — they would turn right instead, heading for the same location in space where they previously found food.

What happened?

The rats turned right.

They weren’t just following a habit. They seemed to understand the space — to hold a mental representation of the maze.
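The two competing predictions come down to simple geometry, so here is a small Python sketch that works them out. It assumes an idealised cross maze with compass-named arms and treats “turn left” (response rule) and “go to the west arm” (place rule) as the only two candidate strategies; the function names are hypothetical.

```python
# Compass headings in clockwise order; turning right moves one step clockwise.
HEADINGS = ["north", "east", "south", "west"]


def turn(facing: str, direction: str) -> str:
    """Return the new heading after a left or right turn at the intersection."""
    step = 1 if direction == "right" else -1
    return HEADINGS[(HEADINGS.index(facing) + step) % 4]


def facing_from_start(start_arm: str) -> str:
    """A rat starting in an arm runs toward the centre, i.e. faces the opposite arm."""
    return HEADINGS[(HEADINGS.index(start_arm) + 2) % 4]


FOOD_ARM = "west"

# Training: start in the south arm (facing north); turning left leads to the food.
assert turn(facing_from_start("south"), "left") == FOOD_ARM

# Test day: start in the north arm, so the rat now faces south.
test_facing = facing_from_start("north")
response_rule = turn(test_facing, "left")  # keep the "turn left" habit
place_rule = FOOD_ARM                      # head for the same place in space
# Which turn reaches the remembered place from the new start?
place_turn = next(d for d in ("left", "right") if turn(test_facing, d) == place_rule)

print(response_rule, place_rule, place_turn)  # east west right
```

The habit predicts the east arm; the spatial map predicts the west arm, which from the north start requires a right turn.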

Illusions

Representational thinking also helps explain quirks in human perception.

Take the Kanizsa triangle. Your brain sees a white triangle that isn’t really there. And the duck-rabbit illusion shows how the same image can flip between two different interpretations. In both cases, there seems to be a gap between what’s on the page and how you see it. Some take this as a sign that the brain is representing what we see.

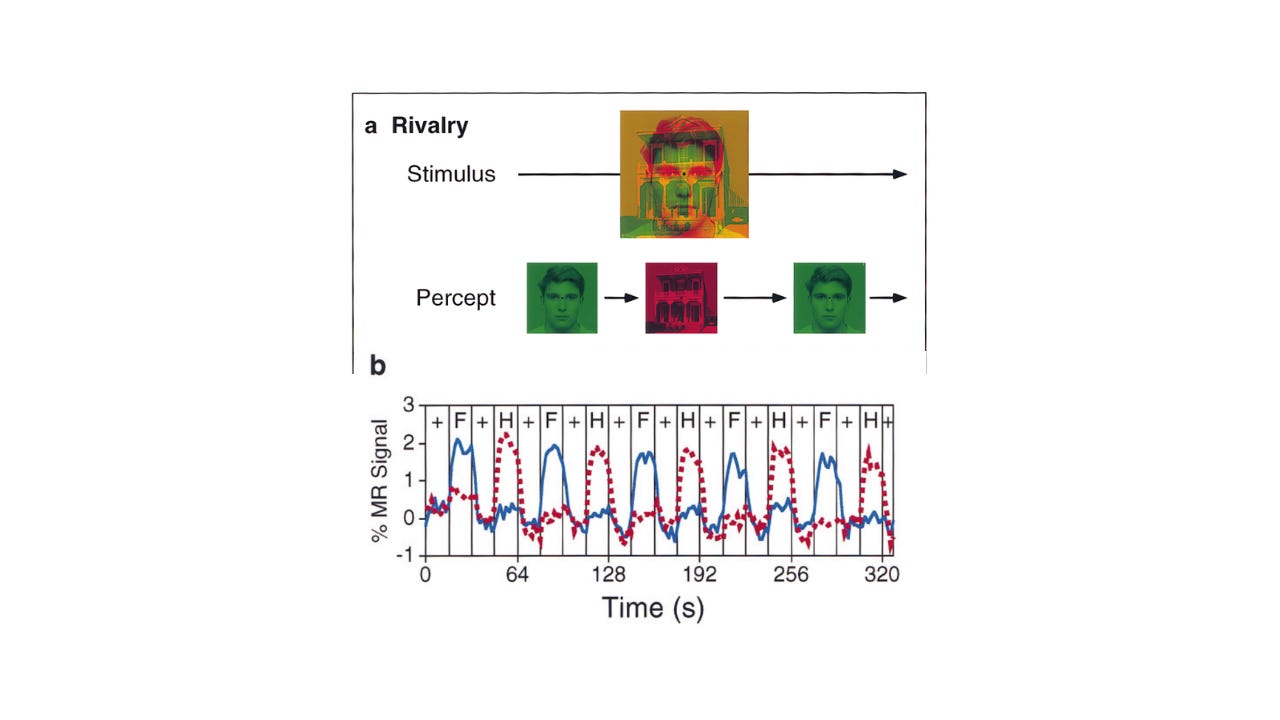

Another striking example is called binocular rivalry. If you show one image to your left eye (say, a house) and a different one to your right (say, a face), your brain doesn’t blend them. Instead, your perception flips back and forth between the two: first the face, then the house, then the face again.

In a famous fMRI study, researchers found that activity in one area of the brain was stronger when people saw the face, and activity in a different area was stronger when they saw the house, even though the input to the eyes never changed.[9] So if the images didn’t change, what did change? It was the internal representation, right?

The Blind Spot

Your eye has a blind spot: the place where the optic nerve exits the retina. There are no photoreceptors there. Technically, you shouldn’t be able to see anything in that patch of space.

But you don’t see a hole in your vision.

Area V1, the primary visual cortex, is laid out like a map of your retina: neighbouring parts of V1 respond to neighbouring locations on the retina.

For a long time, researchers assumed there was no region of V1 corresponding to the blind spot. Why would there be!? The brain wasn’t receiving any input from that part of the retina.

But newer studies suggest otherwise.[10] There is a region of V1 that corresponds to the blind spot. It isn’t missing. And it isn’t silent. What it seems to be doing is ‘filling in’ that area based on the surrounding visual context.[11]
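As a cartoon of what “filling in from context” could mean, here is a Python sketch that fills a gap in a row of brightness values by interpolating from the values on either side. It is an assumption-laden toy (linear interpolation, a gap with known values on both sides, a hypothetical fill_in function), not a model of what V1 actually computes; researchers disagree about how to interpret the real activity.

```python
from typing import Optional


def fill_in(row: list[Optional[float]]) -> list[float]:
    """Fill missing samples (None) by linear interpolation between the nearest known neighbours.

    Assumes every gap has a known value on both sides.
    """
    known = [i for i, v in enumerate(row) if v is not None]
    filled = []
    for i, v in enumerate(row):
        if v is not None:
            filled.append(v)
            continue
        left = max(k for k in known if k < i)   # nearest known sample to the left
        right = min(k for k in known if k > i)  # nearest known sample to the right
        frac = (i - left) / (right - left)
        filled.append(row[left] + frac * (row[right] - row[left]))
    return filled


# Hypothetical brightness values; None marks the "blind spot" with no input.
uniform = [50, 50, None, None, 50]
gradient = [10, 10, None, None, None, 30, 30]
print(fill_in(uniform))   # [50, 50, 50.0, 50.0, 50]
print(fill_in(gradient))  # [10, 10, 15.0, 20.0, 25.0, 30, 30]
```

A uniform surface simply continues across the gap, which is roughly why you don’t notice a hole. Whether the brain is doing anything like this, and whether calling it a representation helps, is exactly what is up for debate.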

Representational thinking is the winning framework in cognitive science for a reason.

It has allowed scientists to build models, design experiments, and explain behaviour like no other idea has before. It’s turned the mind into something we can model, measure, and manipulate.

It has won because representational thinking fits the evidence.

But hold up.

Just because something fits doesn’t mean it’s the right explanation.

Let’s turn now to the critics — and take a closer look at some of the key criticisms of representational thinking.

Q3. What Do the Critics Say About Representationalism?

For such a popular idea, representational thinking has drawn a lot of criticism. One reason is that the term representation doesn’t have a single, clear meaning. It gets used in different ways by different people. So, when critics take aim, they’re often shooting at different versions of the idea.

Still, there are some common concerns. Let’s walk through four of the big ones.

1. Reverse Inference

Just because a brain pattern shows up when you see a dog doesn’t mean it represents a dog. Remember: correlation does not equal causation.

When psychology shifted from behaviourism to cognitivism, it brought with it a vocabulary: attention, memory, reasoning, emotion. Neuroscientists picked up that vocabulary and went looking for where those things “live” in the brain.

Researchers put volunteers in fMRI machines and showed them pictures. They found patches of brain that respond more strongly to one category of images (say, faces) than to another (say, houses). They triumphantly named the face-responsive patch the fusiform face area and claimed that this is the spot where “the brain represents faces.” They did something similar for places.

Soon we had all sorts of areas — a word area, a fear centre, a reward pathway, a value hub, an empathy centre, a pain signature, a speech production module, and even a love centre.

But there is a problem here.

It is a classic example of reverse inference:[12] reasoning backwards from “this region is active” to “this mental process is happening.”

Critics argue that these kinds of early experiments in cognitive neuroscience, the ones that correlate brain responses with psychological categories, do not demonstrate that those brain responses represent those categories. They don’t even demonstrate that the psychological categories themselves pick out anything real in the brain.

One problem is that the area we’ve labelled the face area sometimes responds more strongly to things that are not faces. Consequently, there is a lively debate about what the fusiform face area actually does.[13]
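Reverse inference is commonly framed with Bayes’ rule: how much a region’s activity tells you about a mental process depends on how selective that region is. The Python sketch below uses made-up probabilities (not values from any study) to show why a “face area” that also responds to non-faces is weaker evidence for face processing.

```python
def posterior_face(p_active_given_face: float,
                   p_active_given_other: float,
                   prior_face: float) -> float:
    """Bayes' rule: P(face processing | region active)."""
    # Total probability that the region is active, across faces and non-faces.
    p_active = (p_active_given_face * prior_face
                + p_active_given_other * (1 - prior_face))
    return p_active_given_face * prior_face / p_active


# Hypothetical numbers, chosen only for illustration.
selective = posterior_face(0.8, 0.1, prior_face=0.5)    # rarely active for non-faces
unselective = posterior_face(0.8, 0.4, prior_face=0.5)  # often active for non-faces

print(f"{selective:.2f}")    # 0.89
print(f"{unselective:.2f}")  # 0.67
```

The same activation is much weaker evidence when the region also fires for other things. And even in the best case, the posterior is a probability about which condition was probably running, not a demonstration of what the activity is about.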

So critics warn that when we say the brain represents something, we need to be careful we are not simply projecting our psychological categories onto patterns of activity and calling it a representational discovery.

2. Brains are Not Digital Computers

Another worry is that representational thinking borrows too heavily from computer metaphors.

Brains aren’t digital machines. They don’t run lines of code. They’re biological systems — wet, noisy, and constantly changing.

Some neuroscientists argue that the idea of neural coding is misleading. The brain doesn’t so much send messages as it participates in complex, dynamic loops.

Critics argue that we’re looking at the mind through the wrong lens. Representations might be one useful tool, but they can’t be the whole story. And if we keep trying to force everything through that framework, we are likely to miss how the brain actually works.

3. The Grounding Problem

Then there’s the problem we tackled last week: how do representations get their meaning?

What makes a particular pattern of neural activity about a dog? Why does another one refer to a tree or a memory or a future plan?

This is the grounding problem. If representations are just patterns in the brain, what ties them to things in the world? Without a link between a representation and what it refers to — between brain pattern and real-world content — we’re left with a pile of patterns and no meaning.

4. Misrepresentations

Finally, there’s the misrepresentation problem.

Imagine you glance out the window and think you see a dog in the garden. Later, you realise it was just a watering can — with a handle that looked a little too much like a tail.

Was your brain wrong?

It seems like it was.

You might shrug and say, sure, misrepresentations happen. What’s the big deal?

Here’s the puzzle: what allows you to say some representations are true and some representations are mistakes?

The misrepresentation problem is related to the reverse inference problem and the grounding problem.

To see why, let’s start with something familiar: the weather.

Let’s say, while inside your home, you check the weather forecast on your phone. There’s a bright little sun icon over your city.

But when you step outside, it’s not sunny. It’s actually pouring rain.

The forecast was wrong. And you can say that because we’ve all agreed, by convention, that the sun icon stands for sunny skies. If that icon sometimes stood for sunshine, sometimes for snow, and sometimes for “it’s Tuesday,” there’d be no way to judge when it got things right or wrong.

Now, back to the brain.

Let’s say there’s a neural pattern — call it P — that reliably shows up when you see a dog. So, we say, P represents dog. What do we say about the time you thought you saw a dog, but it was actually a watering can? There was no dog, but P activated.

Did your brain misrepresent the watering can as a dog?

If P truly means dog, then yes, it misrepresented.

But here’s the problem: you only know it misrepresented if you’ve already accepted that P represents dog — and that’s the very thing we’re trying to prove.

Let’s spell out the logic:

Premise 1: If DOG is present, then P activates.

Premise 2: If P activates, then DOG is present (⇒ P represents DOG).

Conclusion 1: Therefore, P represents DOG. (from P1 & P2)

Premise 3: P activates when a watering can (¬DOG) is present.

Premise 4: If P activates without DOG, that’s a misrepresentation.

Conclusion 2: Therefore, P misrepresents DOG. (from P3 & P4, but only if C1 holds)

Here’s the problem: Conclusion 2 only works if Conclusion 1 is already solid. And Conclusion 1 relies on Premise 2 (whenever P activates, a dog is present), which Premise 3 flatly contradicts.

Worse, Premise 2 smuggles in the very content relation that we are trying to justify. So the argument assumes the very thing it’s supposed to explain.

In other words, the whole argument loops back on itself. It’s circular.

Misrepresentation only makes sense if successful representation is already grounded (P1 + P2 → C1), but that grounding itself rests on the reverse-inference move in P2 — exactly the assumption critics say is unjustified.
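One way to make the circularity concrete is a toy model check. The Python sketch below uses entirely made-up observations; the function names are hypothetical. It tests P1 and P2 against what happened, then tries two ways of assigning content to P. If content is simply “whatever makes P fire,” the watering can becomes part of P’s content and nothing can ever count as an error (philosophers call a version of this the disjunction problem). The only way to get an error out is to stipulate in advance that P means dog, which is exactly the assumption the argument was supposed to earn.

```python
# Hypothetical observations: (what was actually in the garden, did pattern P fire?)
Observation = tuple[str, bool]
observations: list[Observation] = [
    ("dog", True),
    ("dog", True),
    ("cat", False),
    ("watering can", True),  # the misleading glimpse
]


def premise_1(obs: list[Observation]) -> bool:
    """P1: whenever a dog is present, P activates."""
    return all(fired for thing, fired in obs if thing == "dog")


def premise_2(obs: list[Observation]) -> bool:
    """P2: whenever P activates, a dog is present."""
    return all(thing == "dog" for thing, fired in obs if fired)


def content_by_correlation(obs: list[Observation]) -> set[str]:
    """A purely correlational assignment: P is 'about' whatever makes it fire."""
    return {thing for thing, fired in obs if fired}


def misrepresentations(obs: list[Observation], content: set[str]) -> list[str]:
    """Firings whose cause falls outside the assigned content count as errors."""
    return [thing for thing, fired in obs if fired and thing not in content]


print(premise_1(observations))  # True
print(premise_2(observations))  # False: the watering can breaks P2
correlational = content_by_correlation(observations)
print(sorted(correlational))                            # ['dog', 'watering can']
print(misrepresentations(observations, correlational))  # [] (no error is possible)
print(misrepresentations(observations, {"dog"}))        # ['watering can'], by stipulation
```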

So where does that leave us?

Critics insist that until we have a non-circular way to say why a neural pattern means dog, all this talk of representations — of concepts, ideas, thoughts, or grounding — is just hand-waving. Calling a watering-can activation a dog error might sound scientific, but only because we’ve already baked in the very meaning we’re trying to prove.

That’s why misrepresentation is often treated as a litmus test. A convincing representational theory of mind can’t just explain how the brain gets things right; it also has to show how the same machinery can get things wrong and still count as representing the world.

So, is there a representational theory that passes the misrepresentation test?

Can we talk about representation without sneaking in assumptions? Or do we have to admit that the feeling of aboutness is an illusion?[14]

Next Week…

Next week, we’ll start looking at some of the main theoretical contenders and see how they hold up.

Notes

[1] Nick Shea’s Representation in Cognitive Science is a key resource on this topic.

[2] Calvo Tapia, C., Tyukin, I., & Makarov, V. A. (2020). Universal principles justify the existence of concept cells. Scientific Reports, 10, 7889. https://doi.org/10.1038/s41598-020-64466-7

[3] For example:

Facchin, M. (2024). Neural representations unobserved—or: a dilemma for the cognitive neuroscience revolution. Synthese, 203, 7. https://doi.org/10.1007/s11229-023-04418-6

Cole, D. (2010). Anthony Chemero: Radical Embodied Cognitive Science. Minds & Machines, 20, 475–479. https://doi.org/10.1007/s11023-010-9194-y

[4] Sometimes this pattern is called a neural code or population code.

[5] E.g., Baddeley’s central executive, Adaptive Control of Thought-Rational (ACT-R), Global Workspace Theory.

[6] Watson (1913) and Skinner (1930s–1950s) defined the movement.

[7] Chomsky’s 1959 review of Skinner’s Verbal Behavior is the canonical turning point.

[8] Packard, M. G., & McGaugh, J. L. (1996). Inactivation of hippocampus or caudate nucleus with lidocaine differentially affects expression of place and response learning. Neurobiology of Learning and Memory, 65(1), 65–72. https://doi.org/10.1006/nlme.1996.0007

[9] Tong, F., Nakayama, K., Vaughan, J. T., & Kanwisher, N. (1998). Binocular rivalry and visual awareness in human extrastriate cortex. Neuron, 21(4), 753–759. https://doi.org/10.1016/s0896-6273(00)80592-9

[10] E.g., Komatsu, H., Kinoshita, M., & Murakami, I. (2000). Neural responses in the retinotopic representation of the blind spot in the macaque V1 to stimuli for perceptual filling-in. Journal of Neuroscience, 20(24). https://www.jneurosci.org/content/20/24/9310

de Hollander, G., van der Zwaag, W., Qian, C., Zhang, P., & Knapen, T. (2021). Ultra-high field fMRI reveals origins of feedforward and feedback activity within laminae of human ocular dominance columns. NeuroImage, 228, 117683. https://doi.org/10.1016/j.neuroimage.2020.117683

[11] Researchers disagree on how to interpret this “filling-in” activity. It’s an interesting debate, which I will return to in an upcoming essay.

[12] Poldrack, R. A. (2006). Can cognitive processes be inferred from neuroimaging data? Trends in Cognitive Sciences, 10(2), 59–63. https://doi.org/10.1016/j.tics.2005.12.004

For an opposing view, see:

Hutzler, F. (2014). Reverse inference is not a fallacy per se: Cognitive processes can be inferred from functional imaging data. NeuroImage, 84, 1061–1069. https://doi.org/10.1016/j.neuroimage.2012.12.075

[13] Bilalić, M. (2016). Revisiting the role of the fusiform face area in expertise. Journal of Cognitive Neuroscience, 28(9), 1345–1357. https://doi.org/10.1162/jocn_a_00974

Burns, E. J., Arnold, T., & Bukach, C. M. (2019). P-curving the fusiform face area: Meta-analyses support the expertise hypothesis. Neuroscience & Biobehavioral Reviews, 104, 209–221. https://doi.org/10.1016/j.neubiorev.2019.07.003

[14] Yes, that is a false dichotomy. We don’t have to choose between talking about representation without any assumptions and declaring aboutness an illusion. There are other options.
