How to Talk to Aliens

The Hitchhiker's Guide to Symbols

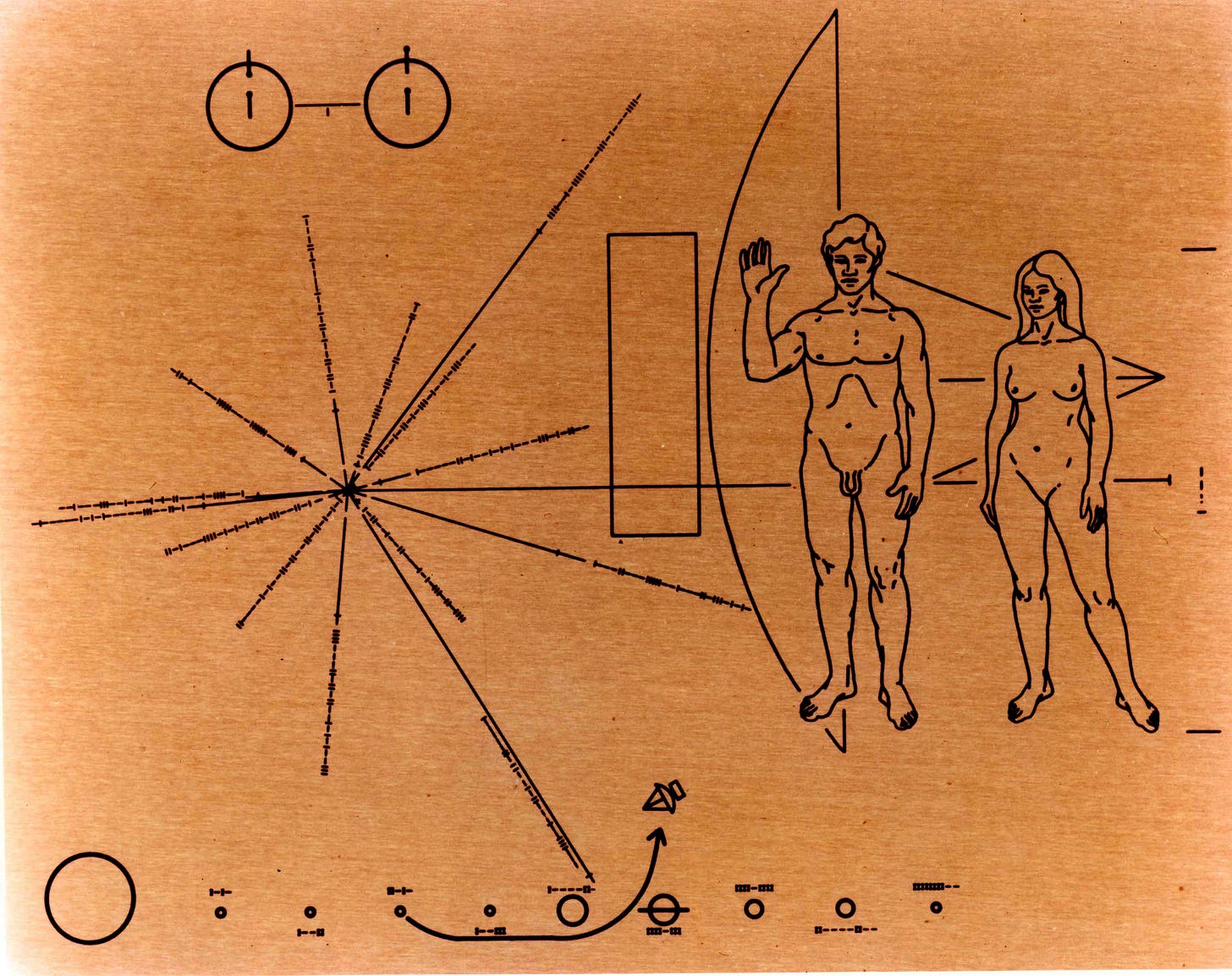

In 1972, when NASA launched the Pioneer 10 space probe, they sent it off with a strange-looking metal plaque.

The plaque was engraved with pictures and symbols. It showed a schematic of the hyperfine transition of hydrogen, a diagram of the solar system with the planets in order of distance from the Sun, the spacecraft’s outbound path, and a starburst pattern. But the largest image was a line drawing of a naked man and woman, with the man raising his hand in greeting.

Why attach this plaque to a spaceship?

Well…

Maybe one day, far beyond our solar system, some alien life form will stumble across this plaque. Maybe the alien life form will be intelligent. And maybe they will look at the plaque. And maybe they will study the images. And maybe they will realise there is (or was) intelligent life on another planet with scientific knowledge, and peaceful intentions.

That’s the hope behind this plaque. It’s a kind of message in a bottle.

The idea of pinning this message to NASA’s Pioneer 10 (and later Pioneer 11) didn’t actually come from NASA. It came from the British science writer Eric Burgess. After attending a talk by Carl Sagan, Burgess approached him and asked, in effect, “If this Pioneer 10 probe is leaving our solar system forever, shouldn’t we send it off with a postcard?”

Sagan, who had been lecturing about how we might communicate with intelligent life forms, was instantly hooked. He pitched the idea to NASA, and — astonishingly — NASA said yes.

This was just three weeks before launch. Sagan had to act fast.1

He teamed up with his wife at the time, artist Linda Salzman Sagan, and astrophysicist Frank Drake.2 Together, they sketched out a design for the plaque.3

Their thinking was simple but bold: science might be a kind of universal Rosetta Stone, a shared understanding that could unlock meaning even between totally different kinds of beings. Aliens might not share our language or culture, but if they exist, they would live in the same physical universe we do. And physics, the team hoped, could bridge the communication gap.

So they chose symbols that pointed to features of the universe they believed would be the same everywhere. Hydrogen, the most common element in the cosmos, would always behave the same way. Stars called pulsars would always blink in steady, predictable patterns.4 And the layout of our solar system (our Sun and its planets) would always have the same shape and order, no matter who was looking.5

In other words, they were betting that physics is universal — and that any intelligent beings who stumbled across this little probe would be able to recognise the patterns and figure out where it came from.

That’s a hopeful bet — because even if physics is the same everywhere, interpretation might not be.

I remember learning about this plaque when I was a kid. And I remember wondering — what the heck were they thinking!? Why would we want to tell aliens exactly how to find us? It seemed like a crazy idea to me, and a little bit scary. It felt like we were giving a stranger our home address and just hoping they turned out to be friendly.

But as an adult, a different question comes to mind:

What would an alien need to understand this plaque?

Apart from the obvious — like having the kind of body that could physically interact with the plaque — these aliens would also need a certain way of thinking.

They’d need to understand the idea of a representation.

Why? Because that’s what this plaque is full of: representations.

The sketch of the man and woman is meant to represent human beings. The line-up of circles at the bottom represents our solar system. The raised hand is meant to represent a gesture of friendliness. And the little diagram in the top left — two circles connected by a line — represents a fundamental unit of time and length.
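To make that last representation a little more concrete, here is a rough sketch of the arithmetic behind it. The hydrogen hyperfine transition has a frequency of about 1420.4 MHz, which gives a period of roughly 0.7 nanoseconds and a wavelength of roughly 21 centimetres. The plaque then writes other quantities as binary multiples of those units; the binary number for 8 beside the woman, for instance, puts her height at about 8 × 21 cm. The function names below are my own, and the pulsar period is an illustrative value, not one read off the plaque.

```python
# Sketch: the hydrogen hyperfine transition as a unit of time and length.
# The constants are standard physical values; the function names and the
# example pulsar period are illustrative assumptions.

HYDROGEN_FREQ_HZ = 1_420_405_751.768  # hyperfine transition frequency (Hz)
SPEED_OF_LIGHT_M_S = 299_792_458      # exact by definition (m/s)


def unit_of_time_s() -> float:
    """One period of the hyperfine transition, in seconds."""
    return 1.0 / HYDROGEN_FREQ_HZ


def unit_of_length_m() -> float:
    """One wavelength of the hyperfine transition, in metres."""
    return SPEED_OF_LIGHT_M_S / HYDROGEN_FREQ_HZ


def to_plaque_binary(quantity: float, unit: float) -> str:
    """Round a quantity to a whole number of units and write it in binary."""
    return format(round(quantity / unit), "b")


def from_plaque_binary(bits: str, unit: float) -> float:
    """Read a binary count of units back into an ordinary quantity."""
    return int(bits, 2) * unit


if __name__ == "__main__":
    print(f"unit of time:   {unit_of_time_s() * 1e9:.3f} ns")
    print(f"unit of length: {unit_of_length_m() * 100:.1f} cm")

    # Binary 1000 is 8, so this is 8 wavelengths.
    height_m = from_plaque_binary("1000", unit_of_length_m())
    print(f"height from binary 1000: {height_m:.2f} m")

    # Hypothetical pulsar with a 0.5 s period, written in hydrogen periods.
    bits = to_plaque_binary(0.5, unit_of_time_s())
    print(f"0.5 s pulsar period needs {len(bits)} binary digits")
```

Notice how much the reader has to bring to this. The code only works because we already know which marks are digits, which way to read them, and which unit they count.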

In other words, the aliens would need to understand that the marks and shapes on this plaque stand in for something else — something that isn’t just the marks and the shapes.

The trouble with representations

To state the obvious: the naked sketch of the man and woman is not actually a man and a woman. Understanding that a few lines and marks stand in for real human beings is not a trivial thing.

But let’s say an alien did grasp the idea that some things can represent other things. The next hurdle would be realising that these particular lines and marks were representations — that they weren’t just random grooves or decorative etchings.6

And even if they understood that, they’d also need to recognise that these representations were meant to communicate something.

If they managed all that, they’d face yet another puzzle: figuring out what kind of representations they were looking at. That the drawing of the hyperfine transition of hydrogen was a scientific diagram, not an abstract doodle. That the figures of the man and woman represented living beings, not maps, or blueprints. And maybe trickiest of all, they’d need to interpret the raised hand as a friendly wave — not as a signal of impatience, aggression, or some strange anatomical quirk.7

It’s starting to sound like a complicated task, isn’t it!?

Imagine you’re an alien who stumbles across this plaque. Would you know that the shapes on it were symbols meant to represent something else? How would you know that? And even if you grasped that basic idea, how would you figure out what they were representing? The marks could be read in endless ways — as decoration, as random scratches, as technical diagrams. There’s nothing in the marks themselves that tells you what they mean.

The symbols on this plaque mean something to you and me. But why do they mean something to us? What is it about these representations that allows them to be meaningful for us? And how could they become meaningful to someone (or something) else?

This is the puzzle of representations

We humans rely on representations all the time. In fact, once you start looking for them, you realise they’re everywhere.

Words are an obvious example. We use the letters d-o-g to stand in for a real dog out in the world. Pictures work the same way — a drawing or photo of a dog represents an actual dog.

But representations don’t stop there. Road signs are another example. A red octagon almost universally means Stop, even though there’s nothing about that shape and colour that necessarily means stopping.8

Gestures are representations too. Depending on where you are in the world, a thumbs-up might mean approval, agreement, or perhaps a rude insult.

Representations are so central to how we communicate and navigate the world that it’s easy to overlook just how important they are.

One popular and influential theory of the mind — both in contemporary analytic philosophy and cognitive science — centres around the idea that the mind works by creating and using representations.9

Aptly, this theory is called representationalism. I’ll say more about representations and representationalism in an upcoming essay, but for now note that, in cognitive science, patterns of neural activity are often considered the vehicles of representation.10

When a dog comes into view or you hear the word dog, there is a certain pattern of neural activity that goes on in your brain. This pattern of activity is said to represent the dog.

Because representations are baked into how we communicate, how we make sense of the world, and how we think, we rarely stop to consider how puzzling they really are.

But it’s worth pausing to think about it.

There’s nothing about the letters d-o-g that naturally points to a furry, tail-wagging creature. Without somehow linking d-o-g to something else (like an actual dog), those letters have no meaning.

But what makes a representation about something?

Last week we bumped into this worry in Searle’s famous Chinese Room thought experiment.

Searle described the situation as syntax without semantics. To remind you: syntax is all about form — for example the shape of letters and how they’re ordered or structured. Semantics, on the other hand, is about meaning — what those letters actually represent.

Searle’s argument is that digital computers have syntax, but no way to gain semantics. For an AI, he says, symbols aren’t linked to anything in the world. In fact, he argues that calling them symbols is misleading, because for a symbol to be a symbol it must symbolise something.11 And for a representation to be a representation it must represent something.
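One way to picture "syntax without semantics" is a program that answers by shape alone. The sketch below is my own toy illustration, not Searle's formulation: the rulebook entries are arbitrary placeholder tokens, and nothing in the program connects them to anything outside itself.

```python
# Toy illustration of rule-following on symbol shapes alone.
# RULEBOOK and its tokens are hypothetical placeholders; they are matched
# by equality only and are never linked to anything in the world.

RULEBOOK = {
    ("squiggle", "squoggle"): ("blip",),
    ("blip", "squiggle"): ("squoggle", "squoggle"),
}


def respond(symbols: tuple[str, ...]) -> tuple[str, ...]:
    """Return whatever output the rulebook pairs with this input sequence."""
    return RULEBOOK.get(symbols, ("unknown",))


if __name__ == "__main__":
    print(respond(("squiggle", "squoggle")))
    print(respond(("blip", "squiggle")))
```

The function follows its rules perfectly, yet what a squiggle stands for appears nowhere in it. Whether that gap can ever be closed from the inside is the point under dispute.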

As we discussed last week, Searle’s ideas are controversial. Critics argue that he sets up a false divide between syntax and semantics.12

But regardless of where we land on Searle’s argument, there’s a question here that is worth addressing: How do marks on a page, scratches on a plaque, and patterns of neural activity in our brain come to represent anything at all? How do these things get connected to other things in the world?13

Or, as philosophers like to ask:

How are representations grounded?

Searle’s Chinese Room might make it seem like this puzzle — how symbols get their meaning — is a problem unique to digital computers. But is that really the case? Or do we face a similar challenge ourselves?

According to representationalism, certain patterns of neural activity in our brains are just that: representations. But what gives us the right to call them that?

There’s nothing inherently dog-like about a particular pattern of neural activity. A burst of spikes in your visual cortex doesn’t come with a label that says dog.

So how does that pattern represent dog?

One tempting answer is to imagine an inner observer — something inside you that reads the spikes and interprets them. But that just pushes the problem back a level. Who — or what — is interpreting the interpreter?

The other extreme is to say the spikes are just noise. Meaningless firings. But that won’t do either. Those patterns do correlate with real things in the world. When a dog comes into your visual field, something reliable happens in your brain.

So it might seem like we’re stuck between two bad options: either there’s a little homunculus in your head reading the signals, or the signals are meaningless.

But that’s a false dichotomy. There are other possibilities.

From a physicalist perspective, somehow, without a little man inside our heads, we can point to patterns in a brain and say they’re about something in the world.

But how? What gives these patterns their aboutness?

That, in a nutshell, is the grounding problem, and it’s exactly where I’ll pick things up in next week’s essay.

In Sagan’s memoir essay “A Voyager’s Greetings” (in Murmurs of Earth, 1978), Linda Salzman Sagan gives a lovely backstage account.

For a short Science report on the design: https://astro.swarthmore.edu/astro61_spring2014/papers/sagan_science_1972.pdf

Artist Jon Lomberg also contributed line-art refinements, though his name is often omitted in brief summaries.

This is in reference to the starburst pattern, which shows 14 pulsars with their pulse periods in binary; because pulsar periods are highly regular (though they slow over millennia), they form a “cosmic GPS.” I’ve taken some liberty here describing them as if they “blink in steady, predictable patterns”, but technically the blinking is radio pulses rather than visible flashes.

Strictly speaking, orbits change over millions of years. They are stable on the timescale relevant to an interstellar postcard, but not forever.

These statements might be perfectly defensible, but it is important to note that they are philosophical inferences, not factual claims.

Many cultures (and some primates) use open-hand displays as a sign for surrender or threat.

While writing this essay, I learned that the shape and colour of stop signs is standardised by the Vienna Convention on Road Signs (1968), although not all countries have adopted it.

Other frameworks question the very idea that cognition hinges on internal symbols. Enactivists see mind as an organism’s skilful engagement with its world (Varela et al. 1991); behaviourists explain behaviour without postulating inner content (Skinner 1953); and eliminativists predict that talk of mental states like beliefs and desires will vanish as neuroscience advances (Churchland 1981).

What makes neural activity a representation is an important question — one we will return to in a future essay.

Searle makes a distinction between intrinsic and derived intentionality.

See, e.g., Daniel Dennett’s True Believers (1981) for the intentional-stance reply.

We need to tread carefully here. Neural patterns and public symbols might seem to pose the same puzzle — but lumping them together too quickly could be misleading.

When it comes to communicating with aliens, I suspect when we finally do encounter them, we'll discover how impoverished our imagination has been about what forms life can take. We all live in the same universe with the same laws of physics, but those laws probably allow wider variation than we can imagine based on our experience of one biosphere.

But I think the Pioneer plaque isn't aiming to communicate with just any lifeform, but one that has managed to build their own civilization, in some manner close to how we define "civilization." That implies a level of selection to get to that point, one that it's hard to imagine happening without some form of symbolic thought and communication, or at least the ability to recognize significant patterns. But I am aware that even saying that may reveal a poverty of imagination.

I see the grounding of internal representations being about causal chains, from the represented to the representation, and the way it's used by the system to act toward the represented. To me, that's what Searle overlooks: this is an issue for both engineered and evolved systems. There's nothing about individual neurons or even neural circuits that has meaning. They only have that meaning as part of their larger causal context. The same is true for code running in a computer system.

Interesting discussion, as always Suzi!

Ah, cliff-hanger ending. Just as it was getting good! 😄

Other comments have touched on excellent points, so I'll just tender some random thoughts that occurred to me while reading…

The odds of any putative alien species finding the Pioneer probes (or the Voyager probes with their Golden Records) are about as close to nil as can be. Space isn't just big or even huge, it's vast beyond comprehension. Pioneer 10 is ~136 AU; Pioneer 11 is ~115 AU. Voyager 1 is the most distant at ~167 AU, and Voyager 2 is ~140 AU. For reference, the Oort cloud surrounding the Solar system extends from 1,000 AU to over 100,000 AU. So, all four probes have barely left the neighborhood. They have many millions of years before they'll reach the closest stars.

One thing about the Solar system diagram: Saturn's rings aren't forever. They're thought to be roughly 100 million years old (so very recent in terms of the Solar system) and may only last another 100 million years. I agree with what others have said about the raised hand on the humanoid male. (My question is why only the male?) There is also the point that, just because life on Earth comes in two sexes, life that evolved on other worlds would not necessarily find the concept meaningful.

I do agree with what's been said: any spacefaring species capable of finding these probes (in many millions of years) would almost certainly need a language capable of accumulating the necessary knowledge and of supporting communication between workers involved in space projects.

Very true about advertising our presence! There is the "Dark Forest" notion that smart species do not announce their presence. But that assumes a galaxy teeming with, not just life, but hostile life. (The "Three Body" trilogy by Liu Cixin is all about this.) But there's a simple way to consider the odds of intelligent life that puts the odds at ~10²⁴ to 1. Compare this to the 10¹¹ stars in this galaxy or the 10²² stars in the visible universe, and it seems that we might be rare indeed. Odds are that we're alone in this galaxy.