What Musk's Neuralink Will Actually Do

on hype and science

Hello Curious Humans!

For better or worse, Elon Musk seems to consistently find himself in the spotlight. Given that he’s the CEO of six companies, I guess that’s not too surprising. But let’s not discuss lawsuits, the Twitter saga, or whether Musk is still the richest person in the world. I’m happy to leave those topics well alone. Instead, let’s discuss what happened about ten weeks ago, in late January.

Musk announced (via X, of course) that the first human had received an N1 chip from Neuralink, his brain-computer interface (BCI) company. The news gained some buzz, especially within the medical and neuroscience fields.

Neuralink was not the first to implant a chip into a human’s brain. BCIs similar to Neuralink's system have previously enabled people with limited limb function to control a computer mouse and keyboard, operate a robotic arm, and navigate a wheelchair, all by using only their thoughts.

But Neuralink's ambitions go beyond just restoring lost limb function. Musk has suggested the technology could also return sight to the blind, restore hearing in the deaf, and tackle a range of conditions, including depression, anxiety, insomnia, chronic pain, seizures, addiction, and brain damage. Musk envisions Neuralink will also enable the uploading of thoughts to a computer or the downloading of new information into the brain so that humans will merge with artificial intelligence.

How realistic are these ideas? Are these possibilities a vision of our future, or are they fantastical sci-fi imaginations only to be found in movies?

This week in When Life Gives You AI, we’re asking 3 questions:

What is Neuralink, and what does it do?

How does Neuralink’s BCI system work? and

Will Neuralink fix everything and create AI-enhanced superhumans?

1. What is Neuralink, and what does it do?

Neuralink is a company that has developed a chip — the N1 chip — that can be implanted into the brain. This N1 chip works like a sensor that reads electrical signals from the brain. The chip itself is about the size of a US quarter with 64 highly flexible, ultra-thin threads. Each thread has 16 electrodes — so in total, the Neuralink chip records from 1024 electrode sites. Neuralink also developed a surgical robot called R1 that implants the chip. Yep — the robot does the surgery.

We don’t have much information about the first human to receive the Neuralink device, but here’s what we do know:

To qualify for a Neuralink chip, you must have limited or no ability to use your hands. This loss of function must be due to a spinal cord injury or ALS. The main concern here is that the brain is not damaged. The lack of limb function needs to be due to something outside the brain — so not a brain lesion or brain disorder.

In terms of the surgery, we know that a small part of the skull (the same size as the chip) was removed. Then, the R1 robot delicately inserted the threads into the brain's motor cortex. The choice to insert the chip into the motor cortex was not random — this is an area of the brain that is highly active when thinking about and planning movement.

Once the threads were inserted into the motor cortex, the chip was placed into the precisely made hole in the skull, fitting flush against it. This means that, following recovery, the chip will be completely invisible.

The day after the surgery, Elon posted the following update:

Neuron spike detection means the electrodes can read activity from nearby neurons, and the computer can record this activity.

The N1 chip and the R1 robot are impressive feats of engineering that deserve more attention — but understanding the engineering is not necessary for today’s article, so let’s leave that discussion for another day.

2. How does Neuralink’s BCI system work?

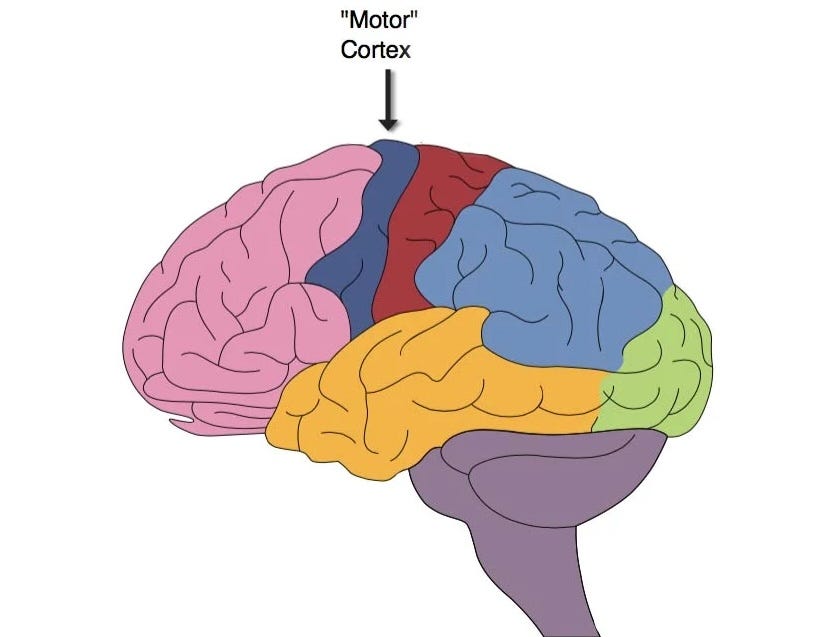

To understand how Neuralink works, we need to understand how the brain works — well, not the whole brain, just the motor cortex.

The motor cortex

The motor cortex (also known as the primary motor cortex) is a strip of cortex that is primarily involved in planning body movement.

The great thing about the motor cortex is that it is mapped to the body. You may have seen images like the one below before — indeed, I included an image like this in my article on the Homunculus Fallacy. The image below is a cross-section of the brain from ear-to-ear.

You’ll notice a few things about this image.

Different body parts are mapped to specific areas of the motor cortex.

The body seems to be upside down—the legs and feet are at the top, and the face is below.

Not only is the body mapped upside down, it is also mapped to the opposite side. The right side of the body is mapped to the left side of the brain, and the left side of the body is mapped to the right side of the brain.

Some body parts seem to be overrepresented. For example, the hands and face are much bigger than actual hands and faces.

Neurons in the motor cortex change their firing rate — that is, they send more, or perhaps a different pattern of, signals — when we plan a body movement. For example, if you plan to move your right thumb, an area of your left motor cortex (about an inch or two above your left ear) will start to change its signals. This change in firing rate happens not just when you actually move your thumb but also when you think about moving your thumb.

This is essentially what Musk was talking about when he mentioned detecting neuron spikes in the patient — they were able to read the firing rates of neurons near the electrodes implanted in the motor cortex. We don’t know for sure, but the N1 chip was most likely implanted into the hand area of the left motor cortex (assuming the patient was right-handed).
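To make "spike detection" and "firing rate" a little more concrete, here is a toy sketch in Python. It assumes a spike is simply any moment the recorded voltage rises above a fixed threshold. The function names, the threshold, and the made-up voltage values are mine, purely for illustration; this is not how the N1 chip is known to process its signals.

```python
def detect_spikes(samples, threshold):
    """Return the sample indices where the voltage rises above the threshold."""
    spikes = []
    for i in range(1, len(samples)):
        # Count a spike only at the upward crossing, not on every high sample.
        if samples[i - 1] < threshold <= samples[i]:
            spikes.append(i)
    return spikes


def firing_rate(spike_indices, n_samples, sampling_rate_hz):
    """Average number of spikes per second over the whole recording."""
    duration_s = n_samples / sampling_rate_hz
    return len(spike_indices) / duration_s


# Toy recording: 10 samples at a hypothetical 10 Hz sampling rate (1 second).
voltage = [0.1, 0.2, 1.5, 0.3, 0.1, 1.8, 1.9, 0.2, 0.0, 1.2]
spikes = detect_spikes(voltage, threshold=1.0)
rate = firing_rate(spikes, len(voltage), sampling_rate_hz=10)
print(spikes, rate)
```

A real system would sample far faster and would need to cope with noise, but the idea is the same: find the spikes, then count how many happen per second.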

Monkey Ping Pong

Before Neuralink implanted an N1 chip in a human’s brain, N1 chips were implanted in the brains of non-human animals, including a macaque monkey.

Before the chips were implanted, the monkey learned to play a game of computer ping-pong. The monkey moved the paddles into the correct location by moving a joystick. Each time the monkey made the correct move, he received a sip of banana smoothie.

After the N1 chips were implanted, the monkey played ping-pong like he did before. But now, the N1 chips detected the neurons firing in the monkey’s brain. The firing rates from all 1024 electrodes were streamed wirelessly to a decoder. Over many trials, the decoder learned how different patterns of firing rates relate to different paddle movements.

Once the decoder learned which brain patterns related to which paddle movements, the researchers unplugged the joystick and let the decoder do the work of moving the paddle.

Let’s unpack this. Before the N1 chips were implanted, to move the paddle, the monkey’s brain would send signals to the monkey’s hand, the hand moved the joystick, and the joystick moved the paddle.

Brain → Hand → Joystick → Paddle

With the N1 chips in place and the decoder doing its thing, the brain signals were sent to the decoder, and then the decoder sent signals that moved the paddle, eliminating the need for a hand or a joystick.

Brain → Decoder → Paddle

So, the monkey played ping-pong simply by thinking about moving the paddle.
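The idea of a decoder "learning" which firing patterns go with which paddle movements can be sketched in a few lines of Python. This is a deliberately simple stand-in (a nearest-average classifier), not Neuralink's actual decoder, whose details are not covered here. The three "electrodes", the firing rates, and the function names are all invented for the example.

```python
def train_decoder(trials):
    """Learn the average firing-rate pattern for each movement.

    trials is a list of (firing_rates, movement) pairs recorded while
    the joystick was still plugged in.
    """
    sums, counts = {}, {}
    for rates, movement in trials:
        if movement not in sums:
            sums[movement] = [0.0] * len(rates)
            counts[movement] = 0
        sums[movement] = [s + r for s, r in zip(sums[movement], rates)]
        counts[movement] += 1
    return {m: [s / counts[m] for s in sums[m]] for m in sums}


def decode(averages, rates):
    """Pick the movement whose learned average pattern is closest to rates."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(averages, key=lambda m: squared_distance(averages[m], rates))


# Hypothetical training data: firing rates (spikes per second) on 3 electrodes.
training_trials = [
    ([20.0, 5.0, 8.0], "up"),
    ([22.0, 4.0, 9.0], "up"),
    ([6.0, 18.0, 7.0], "down"),
    ([5.0, 21.0, 8.0], "down"),
]
decoder = train_decoder(training_trials)

# Joystick unplugged: the paddle command now comes from brain activity alone.
command = decode(decoder, [19.0, 6.0, 8.0])
print(command)
```

The training step corresponds to the many trials with the joystick; the final call to `decode` corresponds to Brain → Decoder → Paddle.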

Restoring lost function

Imagine how the Neuralink system might help someone with quadriplegia. By having a Neuralink chip implanted, such individuals could regain the ability to use a computer by moving a cursor, clicking buttons, and typing, simply through their thoughts. The life-changing autonomy this technology could offer is undeniable.

Is Neuralink’s news of implanting a chip into a human brain ground-breaking?

Well, no, not exactly. The first implantation of a BCI device into a human brain took place more than 25 years ago. The patient, a man who had developed locked-in syndrome, had a chip implanted that allowed him to control a computer.

Since then, implanted BCIs have helped people who have lost limb function to control devices such as computers and robotic arms. There has also been some work on using BCIs to restore lost vision, but as we will explore below, restoring vision faces greater challenges than restoring limb function.

3. Will Neuralink fix everything and create AI-enhanced superhumans?

Most implantable BCI systems are designed to restore lost motor functions. This includes enabling individuals to move artificial limbs or facilitating communication with just their thoughts. There’s good reason for focusing on lost motor functions. The mapping between the motor cortex and the body is relatively straightforward. When the patient thinks about moving their body in a specific way, corresponding areas in the motor cortex change their firing rates.

What about vision? How does vision work in the brain?

Restoring vision using BCIs is tricky for three main reasons:

1. The first thing to notice is that when it comes to restoring lost function using BCIs, the approach differs significantly between restoring lost motor function and restoring lost vision. Restoring lost motor function is an "inside-out" process: the decoder needs to interpret the patterns of neural activity that happen in the brain and then translate those patterns into commands for an external device. On the other hand, restoring lost vision is an "outside-in" problem. The BCI must get visual information into the brain so that the brain can understand and process that information.

2.

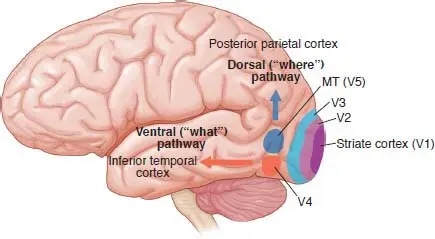

The second tricky problem is that, unlike the motor cortex, vision is not so straightforwardly mapped in the brain.

Visual information follows a pathway from the retinas at the back of our eyes, along the optic nerves, and eventually reaches the visual cortex. But, once in the brain, visual information is not processed in one location. Different aspects of vision, such as line orientation and colour, are primarily processed in distinct areas like V1 for basic visual information and V4 for colour.

The visual information processed in the visual cortex is then segregated into two distinct pathways, commonly called the "what" and "where" pathways. The ventral stream, or the "what" pathway (red in the image above), is responsible for object recognition. For example, recognising faces is primarily handled in the fusiform face area and recognising houses is primarily handled in the parahippocampal place area. On the other hand, the dorsal stream, or the "where" pathway (dark blue in the image above), is involved in processing where things happen in the world, which gives us the perception of the location and movement of objects we see.

3. The third tricky problem is that our eyes are rarely still. About five times per second, our eyes make small movements to scan our environment. If our brain directly and simplistically mapped information from our retinas without any additional processing, the world would seem to whirl and shift every time our eyes moved. If we think of the eyes as being a bit like a camera lens, and that lens shifted every 200 milliseconds, the footage would be an unwatchable mess. But we see the world as if the camera lens is stable.

It turns out that some parts of the visual cortex are mapped according to the layout of the retina. But other parts are mapped according to the spatial relationships in our visual world. Any BCI chip implanted into the visual cortex must consider how the brain maps visual information.
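Here is a small Python sketch of the difference between those two kinds of map. It makes a big simplifying assumption: that an object's position in the world is just where the eye is pointing plus where the object lands on the retina, measured in degrees. The function name and the numbers are mine and are only meant to illustrate the bookkeeping, not how the brain actually does it.

```python
def retinal_to_world(retinal_pos, gaze_pos):
    """World position = where the eye points + offset on the retina (degrees)."""
    return (retinal_pos[0] + gaze_pos[0], retinal_pos[1] + gaze_pos[1])


# A stationary object sits at (10, 0) degrees in the world.
object_world = (10, 0)

# The eye jumps between three gaze positions.
gaze_positions = [(0, 0), (5, 0), (12, -3)]

# Retina-based map: the object's position changes with every eye movement.
retinal_positions = [
    (object_world[0] - g[0], object_world[1] - g[1]) for g in gaze_positions
]

# World-based map: combining retinal position with gaze keeps the object still.
recovered = [
    retinal_to_world(r, g) for r, g in zip(retinal_positions, gaze_positions)
]
print(retinal_positions)
print(recovered)
```

In the retina-based list the object appears to jump around, while in the world-based list it stays put. A chip that stimulates a retina-mapped area would somehow have to account for where the eyes are pointing at each moment.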

The State of BCI Vision

Most work on restoring lost vision has focused on implanting electrodes into the retina (at the back of the eye). A camera attached to a pair of glasses sends signals to the implanted electrodes, which stimulate the retina. By directly stimulating the cells in the retina, these implants aim to mimic the input those cells would receive during normal, healthy vision. This type of BCI could help people who have damage to their retina but retain some undamaged cells. Because the brain’s ability to process visual information is formed through the experience of seeing, these patients must have been able to see at some point in their lives.

The benefit of this approach is that it leverages the brain's existing visual pathways, so the complexity of visual processing is left for the brain to sort out. The downside is that it does not work for people who were born blind or people with extensive damage to the optic nerve or the visual cortex.

Patients who have received retinal implants can perceive light, shapes, and even some patterns, which is a significant step forward. However, the quality of the vision restored is not like normal healthy vision. They can usually make out outlines and high-contrast edges, which can be extremely helpful. However, further research is still required to understand how the brain processes the signals from retinal implants, as this knowledge is important for understanding how higher-resolution vision and more intricate visual details might be achieved.
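To get a feel for why the restored vision is limited to outlines and high-contrast edges, here is a rough Python sketch of turning a camera image into a coarse on/off pattern for a small grid of electrodes. The block size, the brightness threshold, and the function name are assumptions made for this example; it is not the processing used by any particular retinal implant.

```python
def image_to_stimulation(image, block, threshold):
    """Turn a grayscale image into an on/off pattern for an electrode grid.

    Each block x block patch of pixels is averaged; the matching electrode
    is switched on (1) if the patch is bright enough, otherwise off (0).
    """
    rows, cols = len(image), len(image[0])
    grid = []
    for r in range(0, rows, block):
        grid_row = []
        for c in range(0, cols, block):
            patch = [
                image[i][j]
                for i in range(r, min(r + block, rows))
                for j in range(c, min(c + block, cols))
            ]
            average = sum(patch) / len(patch)
            grid_row.append(1 if average >= threshold else 0)
        grid.append(grid_row)
    return grid


# Toy 4x4 camera frame (0 = black, 255 = white): dark on the left, bright on the right.
camera_frame = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]
pattern = image_to_stimulation(camera_frame, block=2, threshold=128)
print(pattern)
```

Sixteen pixels become four electrodes, so the strong dark-to-bright edge survives but any fine detail inside each patch is lost.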

An alternative strategy is to bypass the eye and optic nerve entirely and implant electrodes directly into the visual cortex. While more invasive, this cortical approach could restore vision without a functioning optic nerve or retina, but it might run into other problems that arise because of the complex way that the brain processes vision.

Electrodes directly stimulating the visual cortex cause patients to see flashes of light called phosphenes. These flashes of light can be organised into patterns that might represent visual information, but early research has shown that while it is fairly easy to have patients see flashes of light, producing specific visual perceptions like particular shapes or colours is more difficult. While bypassing damaged eyes and optic nerves sounds promising in theory, achieving a meaningful and coherent visual experience is tricky, and trickier than restoring vision by stimulating the retina.

What about memories? Could we download or upload memory?

Let’s venture into more controversial territory!

Compared to the nice, neat, well-defined motor cortex mapping, memory is extremely knotty. Memory does not have a neat, localised mapping that can be easily targeted with electrodes to retrieve or upload memories. Perhaps because of its complexity, memory is not as well understood as motor preparation signals. What we do know is that memory involves widespread brain areas. Plus, most researchers think that memory in the brain is not recalled like memory in a computer. Instead, each time we remember something, we reconstruct the memory. This complexity makes BCIs for memory far more challenging than those used to replace motor functions.

What about uploading new information into the brain?

I would love it if one day I could upload a quantum mechanics module into my brain so I could finally understand quantum entanglement.

Let’s explore what would be involved in making this sort of technology work.

Similar to restoring lost vision, uploading new information is an “outside-in” problem. We would need to understand how the new knowledge could be encoded by the brain. What are the specific patterns of activity that correspond to understanding quantum entanglement? Currently, our understanding of how abstract concepts, like quantum mechanics, are mapped in the brain is very limited.

Plus, when it comes to abstract concepts, it is likely that individual brains are uniquely organised, even when people share similar knowledge. Successfully integrating new information into the brain would require a customised approach for each brain's wiring. But the brain is highly complex — with about 100 billion neurons and about 100 trillion connections.

Effective learning and memory consolidation rely on the brain's plasticity — its ability to strengthen or weaken the connections between neurons based on new information. Identifying which connections to enhance and which to suppress to produce an understanding of subjects like quantum mechanics is so complex that it is difficult to fathom. Without a detailed map of each individual's neural architecture and an understanding of how to manipulate it precisely, the dream of directly uploading knowledge into the brain seems daunting, to say the least.

The Sum Up

While BCIs have made remarkable progress in restoring lost motor function, the journey from restoring motor functions to augmenting human intellect is a monumental leap. Most cognitive neuroscientists believe the creation of superintelligence through BCIs likely remains an extremely distant prospect if it is achievable at all.

Wow. Absolutely loved this detailed analysis on Neuralink, what it really is, and what it's not.

Thanks, Suzi!

Your piece is a great companion to this long-read by Tim Urban from 2017: https://waitbutwhy.com/2017/04/neuralink.html

Tim's focus is on the bigger picture and how something like Neuralink gets us to a new paradigm in the way we communicate. He traces the history of human communication and talks about how language, despite being the best we've got, is actually an extremely slow and inefficient communication method. Then he moves on to speculating about how a future with direct brain-to-brain communication would look. It's a fascinating read, but I don't know enough about the complexities involved to evaluate which parts are realistic.

If you ever get around to reading it, I'd be curious to hear your take.

Also, now that I know that the term "fusiform face area" is a thing, my life is that much better!