AI Will Not Kill Science

But it might get messy

Imagine a world where artificial intelligence (AI) has become deeply integrated into the entire scientific process. It’s a world where AI assists with (or perhaps entirely carries out) every key step in the knowledge generation pipeline.

What would be the consequences of such a world?

To some, this would be an AI research paradise. Traditional scientific methods can be slow and laborious. But with AI, science would be streamlined and efficient. Scientists would collaborate with AI tools to speed scientific advancements.

Mining LLM databases would uncover previously unconsidered questions and make connections humans would likely overlook without the bandwidth of generative AI. Experiment ideas would be plentiful. Predicted outcomes would be virtually simulated, and optimal parameters would be suggested before any costly physical implementation is carried out.

Even the laborious task of peer review would be outsourced to AI bots. A study’s flaws and implications would be assessed in a fraction of the time it takes a human. Papers meeting AI-verified standards would be rapidly published.

In this AI-augmented scientific world, the pace of discovery would be blazingly fast compared to the sluggish, traditional, human-driven scientific process. New empirical findings and theoretical breakthroughs would accumulate exponentially as AI identifies new areas to investigate.

If this is our optimistic future, many say, sign me up!

But not everyone is convinced by such a rosy picture. To some, this AI-augmented utopia may ultimately represent a crisis for science.

In a recent perspective article published in Nature, Messeri and Crockett (2024) argue that the enthusiastic adoption of AI tools across the research pipeline risks making science less innovative and more error-prone. AI-augmented science, they argue, is a blueprint for scientific monocultures that lack diversity.

Are we headed for “a phase of scientific enquiry in which we produce more but understand less”?

This week in When Life Gives You AI, let’s explore the future of science in an AI world by asking four main questions:

What is diversity in science, and why is it important for progress?

How might AI reduce diversity?

How are scientists currently using AI to do science? and

Should we be concerned about science?

Regular readers will recall my recent post, Can AI Generate New Ideas? In that post, I mentioned an article I planned to publish but held off because a few days before, Nature published a perspective article covering similar issues. This is that put-on-hold article, although it’s changed considerably. I’ve taken some time to consider the concerns raised in the Nature perspective, and I outline my thoughts in question 4.

Before we jump in, I want to clarify the scope. In this article, when I mention AI, I mean the narrow type currently available to the public, not artificial general intelligence (AGI) — the theoretical future AI with human-like intelligence and the ability to teach itself. I did consider restricting this discussion to generative AI or, even specifically, large language models (LLMs). However, scientists use various AI technologies, not just generative AI, like LLMs.

1. What is diversity in science, and why is it important for progress?

When my daughters were small little critters, they discovered the joys of eating banana and cheese on toast. They eagerly inhaled slice after slice. For a few weeks, this was the only thing they ate. Their refusal to eat anything besides banana and cheese on toast came shortly after I informed them that their beloved banana was in crisis.

“What do you mean every banana is the same?” my eldest questioned, staring back at me. “But how is that possible?”

Feeling smug that I knew an answer to one of her seemingly endless questions, I answered, "Believe it or not, pretty much all the bananas we eat come from one single variety of bananas grown a long time ago. They've been cloned and replanted so much there's almost no difference between bananas now."

My youngest furrowed her brow. "Is that... bad?"

“Well, yes and no,” I said, getting a little more serious now. “The sameness makes farming and growing lots of bananas at once pretty easy, but because they are all the same, a new disease or change in the environment could mean trouble for bananas.”

When a species is genetically diverse, a pest or disease can damage or wipe out certain varieties with a specific genetic makeup, but other varieties with slightly different genetic traits resist the threat and survive.

For bananas, it would only take one perfectly evolved pest or disease to wipe out the world's banana supply. The lack of genetic diversity eliminates nature's most powerful mechanism for survival — the ability, as a species, to adjust to changing conditions through variation.

Just as genetic diversity helps a species remain adaptive, diversity of ideas and perspectives allows science to remain responsive to new evidence that challenges existing theories. A lack of diversity in the realm of ideas leaves science vulnerable to narrow thinking, biases, assumptions and stagnation. A monoculture of thinking cannot easily adjust to changing conditions.

Of course, not all ideas are good ideas. With a diversity of ideas, we might expect that along with some good ideas, there will be plenty of bad ones too. But a diverse range of ideas is precisely what is needed to increase the likelihood of finding the good ones — the ones that lead to true innovation.

2. How might AI reduce diversity?

Why might the authors of the recent Nature perspective claim AI-augmented science risks turning science into an overly insular monoculture of knowledge? How might AI reduce the diversity of ideas?

The authors base their argument on three specific illusions of understanding that are heightened when AI is used in scientific inquiry.

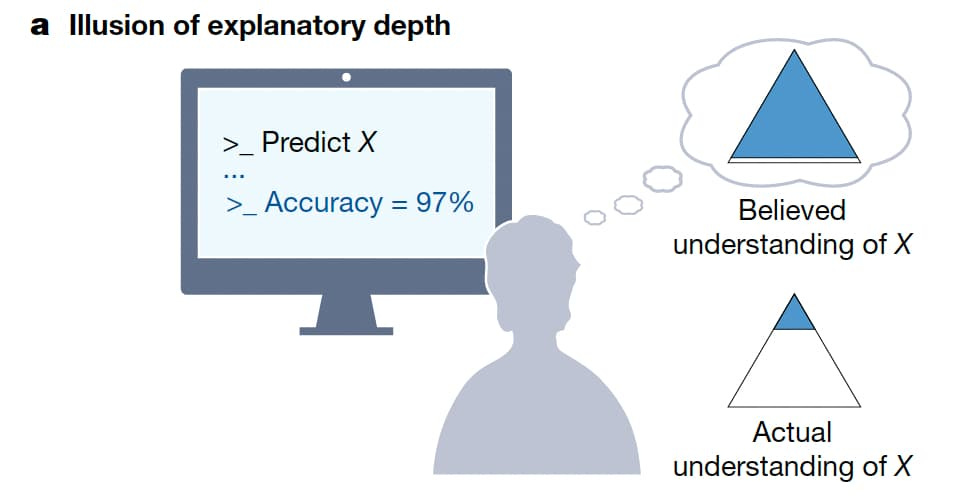

The illusion of explanatory depth

Let’s imagine an ecologist named Hazel. Hazel studies wildlife migration patterns. Over a five-year period, Hazel collects migration data from a few dozen caribou herds and climate data.

After collecting the data, she builds a machine-learning model that predicts caribou herd migration patterns with 97% accuracy. With such a high accuracy score, Hazel believes her model provides a nearly complete understanding of caribou migratory behaviour in response to climate factors.

But Hazel is wrong. The model’s 97% accuracy score is only relevant to her data and the model she used. The data is limited by caribou numbers and climate data for a specific region over a specific time. And the model’s architecture is simplified compared to real-world complexity.
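A toy sketch can make Hazel's mistake concrete. Everything here is hypothetical: the two regions, the temperature thresholds, and the one-rule "model" are my own illustrative assumptions, not Hazel's data or the method from the Nature perspective. The point is only that a model can score almost perfectly on the data it was built from and still do much worse somewhere the underlying rule is different.

```python
import random

def make_region(n, threshold, rng):
    """Hypothetical data: a herd 'migrates' (1) when the temperature
    drops below a region-specific threshold, otherwise it stays (0)."""
    data = []
    for _ in range(n):
        temp = rng.uniform(-30.0, 10.0)
        data.append((temp, 1 if temp < threshold else 0))
    return data

def accuracy(data, cutoff):
    """Share of observations where 'temp < cutoff' matches the label."""
    hits = sum(1 for temp, label in data if (1 if temp < cutoff else 0) == label)
    return hits / len(data)

def fit_cutoff(data):
    """A deliberately simple 'model': try every observed temperature
    as a cut-off and keep the one that scores best on this data."""
    candidates = sorted(temp for temp, _ in data)
    return max(candidates, key=lambda c: accuracy(data, c))

rng = random.Random(0)
region_a = make_region(500, threshold=-10.0, rng=rng)  # where the data was collected
region_b = make_region(500, threshold=0.0, rng=rng)    # a region the model never saw

cutoff = fit_cutoff(region_a)
acc_a = accuracy(region_a, cutoff)  # score on the data the model was built from
acc_b = accuracy(region_b, cutoff)  # score where the real rule differs

print(f"learned cut-off: {cutoff:.1f} degrees")
print(f"accuracy in region A: {acc_a:.2f}")
print(f"accuracy in region B: {acc_b:.2f}")
```

The high score in region A says the model fits region A. It says nothing about whether the model has captured how caribou behave in general.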

Hazel believes she understands the real-world migratory behaviour of caribou in more depth than she actually does. She has fallen for the illusion of explanatory depth (see Figure 1).

This illusion of understanding is exacerbated because Hazel does not understand how AI models work. Thinking AI is a pretty clever tool, Hazel is overly trusting in the results her clever model produced. Trusting in the findings and thinking she has a near complete understanding, she doesn’t seek other viewpoints, challenge any of the model’s assumptions, or even consider contrasting theories.

The illusion of exploratory breadth

Imagine after Hazel successfully publishes her work on caribou migration patterns, other ecologists are inspired to use similar AI models. These other ecologists ask questions and make similar investigations into animal migration patterns based on similar machine-learning models.

This creates an echo chamber. The successful publication of work like Hazel’s leads other researchers to look for other questions that can be answered using similar methods. As more ecologists converge on asking questions and performing science that can only be answered using AI tools, a homogenization of research questions and methods develops.

The scope of inquiry narrows, and the potential for groundbreaking discoveries or paradigm shifts diminishes.

The ecologists have fallen for the illusion of exploratory breadth. They falsely believe AI can test and explore all possible hypotheses when, in fact, the world of testable hypotheses with AI is narrower in scope (see Figure 2).

The illusion of objectivity

Even when trying not to be, scientists (like all humans) will be biased. These biases will influence the questions they ask, how they frame their findings, and the future research they choose to conduct. Recently, science has made efforts to be more diverse: demographically, ethnically, and in gender representation. Science is not perfect in this regard, but it has made strides.

A move towards a more AI-augmented science might risk a regression back to a homogeneous science. Scientists might be vulnerable to the illusion of objectivity, in which they falsely believe AI represents all possible standpoints, when in fact, generative AI tools, such as LLMs, are limited by the views in their training data and by other factors related to how they are designed (see Figure 3).

While LLMs are capable of generating a variety of responses (sometimes even incorrect hallucinations), they are designed with a bias towards being predictable. They are designed to calculate probabilistic predictions of the next word or phrase. This predictability might come at a cost to diversity.

LLMs are designed to provide responses that are reliable, statistically likely, and contextually appropriate based on what we might find in their training datasets. Without this inclination towards predictability, the outputs would often be erratic, less useful, or even nonsensical and wrong. When we do get less predictable results from LLMs, we often say they are hallucinating. To ensure their usefulness, LLMs lean towards predictable and reliable responses over diverse and creative ones.
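To see the trade-off between predictability and diversity in miniature, here is a minimal sketch of how a single next-word choice might be weighted. The candidate words and their scores are made up for illustration, and the "temperature" knob is my own addition: it is a common sampling setting in language models, but nothing in this sketch is taken from any particular model.

```python
import math

def softmax(scores, temperature):
    """Turn raw scores into probabilities. A lower temperature
    concentrates probability on the highest-scoring word."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy_bits(probs):
    """Shannon entropy: a rough measure of how spread out the choices are."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical candidates for "Caribou migration is driven by ..."
words = ["climate", "predation", "insects", "magnetism"]
scores = [4.0, 2.0, 1.0, 0.0]  # made-up scores, highest = most expected

predictable = softmax(scores, temperature=0.5)
diverse = softmax(scores, temperature=2.0)

for word, p_low, p_high in zip(words, predictable, diverse):
    print(f"{word:10s} low temp: {p_low:.3f}   high temp: {p_high:.3f}")
print(f"entropy (low temp):  {entropy_bits(predictable):.2f} bits")
print(f"entropy (high temp): {entropy_bits(diverse):.2f} bits")
```

At the low setting almost all of the probability sits on the most expected word. Raising the setting spreads it across the unusual options, which buys diversity at the price of more erratic output.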

Predictable is not bad. Often, predictability and reliability are exactly what we want. But other times we need diversity — out-of-the-box thinking — we want something orthogonal to what we already know. True innovative scientific breakthroughs — from Copernican heliocentrism to Einsteinian relativity — happen at the tails of the distribution — they are the outliers, the mavericks, the unpredictable.

Without exploring the possibility of diverse ideas, we might be left stagnating in mediocre groupthink. As biodiversity strengthens ecosystems, the diversity of ideas is fundamental to the health of knowledge generation. While the pursuit of diverse ideas may introduce noise or unproductive tangents, it is within this cacophony that the gems of discovery arise.

3. How are scientists currently using AI to do science?

The scientific method generally follows five broad steps:

Hypothesis generation and study design

Data collection

Data analysis

Interpretation of results and writing the report, and

Peer review

Let’s examine each of these five steps and review how scientists are currently using AI at each step in the scientific process.

Hypothesis generation and study design

The first step in the research process is hypothesis generation and study design. In this stage, researchers spend many hours reading other academic research studies.

Consider again Hazel, our ecologist. Hazel is a dedicated researcher, but she is overwhelmed by the amount of academic research she is required to be across. She turns to an LLM to help review the literature on caribou migration patterns and how they might relate to the most recent findings on climate science.

While the LLM might efficiently condense relevant studies, it is designed to provide Hazel with predictable, reliable, and statistically likely answers. At this stage of the scientific process, Hazel needs to know the predictable answers, but she must also consider the unpredictable.

Hazel’s reliance on AI in this stage narrows her research focus to the well-trodden science the LLM deems most relevant and predictable (assuming, of course, the LLM has provided accurate responses).

Hazel mistakenly believes she has a comprehensive understanding of past research in her field, but in fact, her understanding is narrow compared to the distribution of ideas in her field. Based on this narrow understanding, she formulates a narrow set of hypotheses.

Data collection

The second stage in the scientific process is data collection. Using AI as a substitute for real-world data has generated excitement for some scientists, especially where traditional data collection methods are either too costly or impractical.

For instance, in psychology and the social sciences, researchers are using AI to simulate human participants. Digital participants arrive on time, perform the task as requested, and do not tire or need incentives the way human participants do. They also have the potential to provide a broader spectrum of human experiences and perspectives than the traditional approach, which often involves exclusively testing university undergraduate students.

Our ecologist, Hazel, might turn to AI to help generate data for her research. Caribou travel long distances—in fact, their migration is the longest land migration on the planet—and their migration spans many different and sometimes subtle environmental shifts that may affect their routes.

Collecting comprehensive, real-world data is a significant logistical and financial challenge. So Hazel again turns to the power of AI for help.

Hazel employs sophisticated AI models to generate synthetic data on caribou movements. These models are trained on a blend of satellite imagery, historical migration data, and climate change indicators to simulate how varying environmental conditions might alter caribou pathways. This approach allows Hazel to explore various scenarios, including extreme weather events and gradual habitat changes, without needing to do expensive fieldwork.

While using AI to generate data promises to revolutionise data collection, the concern is that AI might inherently narrow our scientific understanding. This narrowing could arise because AI-generated simulations from existing data are simply that: simulations of previously known data. Simulated models may replicate or even amplify existing biases and limitations in the data.
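A deliberately crude sketch shows the worry in its simplest form. Here the "generator" is plain resampling of made-up field measurements, which I am using as a stand-in for a generative model. Real models are more sophisticated and can interpolate between observations, so treat this as an illustration of the limit case rather than a description of how Hazel's tools work.

```python
import random

rng = random.Random(42)

# Hypothetical field data: yearly migration distances (km) for 200 herds.
observed_km = [rng.gauss(1200.0, 100.0) for _ in range(200)]

# Stand-in "generator": draw synthetic records by resampling what was observed.
synthetic_km = [rng.choice(observed_km) for _ in range(10_000)]

print(f"observed records:  {len(observed_km)}")
print(f"synthetic records: {len(synthetic_km)}")
print(f"observed range:  {min(observed_km):.0f} to {max(observed_km):.0f} km")
print(f"synthetic range: {min(synthetic_km):.0f} to {max(synthetic_km):.0f} km")
print(f"distinct synthetic values: {len(set(synthetic_km))}")
```

Fifty times more records, and not one new observation. A herd that behaved differently from anything in the original sample cannot appear in the synthetic set, however large it grows.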

Data analysis

Following data collection is data analysis. Many scientists are using computational analysis and modelling to analyse large datasets. For example, in biology, AI is being used to classify proteins and cell types, which is a time-consuming task when done without the power of AI. Well-built models can identify patterns that would be difficult, perhaps even impossible, for researchers to find when using traditional methods alone.

However, as discussed earlier, there is a risk in relying on AI modelling in science when researchers lack an understanding of the limitations and capabilities of AI models. All models (AI-generated or not) are, arguably, oversimplified versions of reality.

A lack of understanding of how AI models work may exacerbate the problem. Because AI models appear complex, they may be perceived as representing more complex phenomena simply because they are difficult to interpret and not because they are capturing the full complexity of the data.

Writing and interpretation

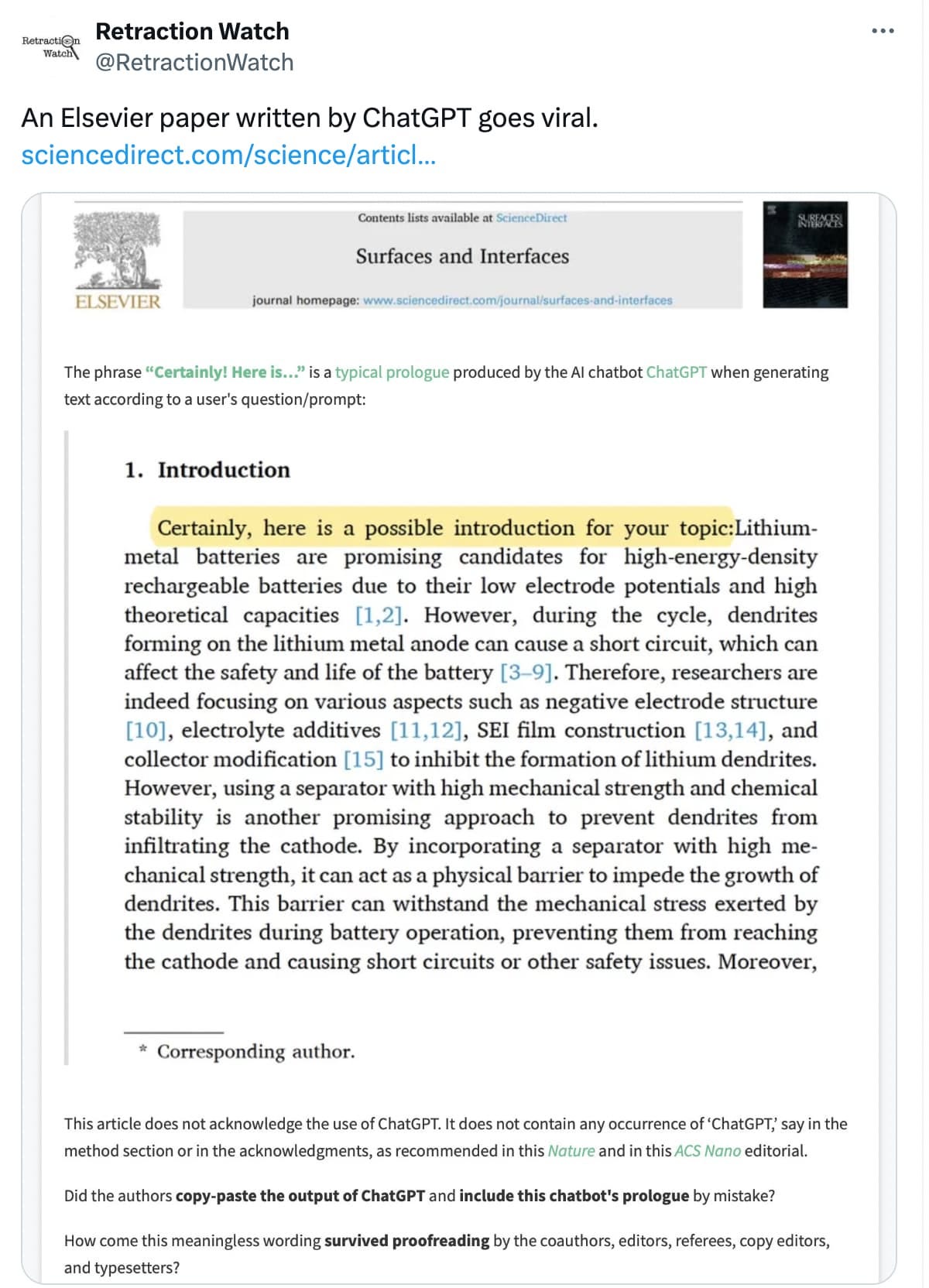

Once data analysis is complete, scientists turn to interpreting their findings and writing manuscripts for publication. Many researchers use LLMs like GPT-4 to assist with structuring and writing these reports, significantly reducing the time and effort required for this task.

In a 2023 Nature survey of over 1,600 scientists, ~30% reported using generative AI to help them write manuscripts. And we are finding phrases like “As an AI language model” and “As of my last update” in scientific writing.

LLMs may not be the most appropriate tool for writing scientific manuscripts. These models may not be optimized for the nuanced style of writing often required for science. To avoid nonsensical outputs, LLMs are designed to favour more straightforward, acceptable ideas over complex and nuanced ones. While science manuscripts might require some straightforward well-known ideas, most will also require the conveying of novel ideas that are often complex and nuanced, with subtle distinctions and specific details.

Peer review

Once the manuscript is written, it is sent to a journal for publication. Before a manuscript is accepted (or rejected) for publication, the journal sends it to independent scientists, usually two or three, working in the research area. These reviewers review the manuscript and provide comments, critiques, and recommendations.

Although scientists are not paid to review manuscripts, they are expected to volunteer their time to do so. Reviewing manuscripts is a time-consuming job, especially if it is done well. So, many are turning to LLMs to help them efficiently complete the review process.

But as we have already discussed, LLMs might lack the ability to judge the true novelty or significance of the science. Employing LLMs to assist with or even replace peer review could undermine the main purpose of this process. What is peer review without peers?

Ideally, reviewers are experts with a deep understanding of the complexity and nuance being discussed and are willing and able to provide insights. Peer review, as it is traditionally done, is not a perfect system, but it’s a step toward limiting published articles to well-substantiated and meaningful science.

There are concerns LLMs are more likely to oversimplify or even misinterpret the manuscript than to provide insightful comments. If reviewers are susceptible to the illusion of objectivity — falsely believing responses from LLMs represent all possible standpoints — they may provide reviews that overlook more nuanced critiques and diverse perspectives only an expert in the field, dedicated to the peer review process, can provide.

4. Should we be concerned about science?

If you spend any time using GPT-4, Gemini, or even the new Claude 3, their limitations become glaringly obvious. Try to get an LLM to write anything nuanced or comment on a specialised area that requires rare expertise; it fails terribly. These limitations are not lost on all scientists.

Sure, there was a time when LLMs were new and shiny, and we thought bot-written content would pass as human-written. Or perhaps even better than human. But a year and a bit after ChatGPT was introduced to the world, not all of us remain so impressed. Last week, Substacker Erik published an op-ed in the New York Times on how “A.I.-Generated Garbage Is Polluting Our Culture.” Many share Erik’s concerns.

Science is designed to be self-correcting. It works because claims made in science are meant to be challenged. Scientists try to find flaws in other scientists’ work. It sounds hostile, but it’s why science works. We publish our work and present it at conferences, expecting other scientists will scrutinize our assumptions, methods, and reasoning. No, it’s not always fun. But we try to understand that bad ideas should be weeded out and good ideas should be built upon. Science is a work in progress.

The early 2010s were a rough time for psychology. The replication crisis hit hard, making it painfully clear that much of what psychologists believed to be true findings... well, weren't. Researchers tried to replicate the classic psychological findings we all learn in first-year psychology classes, but they failed to do so. It became clear some of the classic psychological studies were the result of questionable research methods and a tendency to only publish findings that were in line with previous findings.

Psychology wasn’t destroyed by the replication crisis. But it did change.

Nowadays, we’re seeing better practices in psychological research, like larger sample sizes and better statistical practices. One significant change has been the introduction of pre-registration and registered reports, where researchers lay out their plans before they start their investigations. In the case of registered reports, the study is approved for publication before collecting data, which puts the focus on the methods and questions being asked and not the results of the study. These changes have not made psychology a perfect science. But this is science doing what science does best — it self-corrects.

Other scientific disciplines are now experiencing their own replication crises. And, like psychology, they will be strengthened because of it.

If we are worried AI will destroy science, perhaps we should take a moment to reflect on human nature. Humans, like it or not, are often competitive. And scientists are no different.

When the replication crisis hit psychology, some researchers clung to their old, comfortable methods. These researchers soon discovered their work no longer met the rigorous standards of prestigious journals. When they presented their unpublished work at conferences, it was heavily scrutinised and criticised by other researchers. Other researchers saw the replication crisis as an opportunity. They were the ones who conducted the first replication studies, submitted the first registered reports, and were the first to implement better statistical practices.

We may not be surprised to find scientists, like our friend Hazel, who use AI tools to produce less innovative and more error-prone science, but it should be equally unsurprising to see other scientists criticising that science and seizing the opportunity to do better science.

This is not to say better science is AI-free science. On the contrary, AI will and should have a place in the scientist’s tool belt. But knowing AI’s limitations is key to its successful use. A good scientist will know how to use the tools and which tool is right for which job.


Jeff Bezos has a great quote: “Be wary of proxies”

When you’re measuring a KPI, realize that that KPI is a proxy of an outcome you want to produce or measure.

Make sure you know what you’re measuring or want to produce. Oftentimes we lose sight of that and just focus on the numbers vs the anecdotal and other evidence that may suggest we aren’t achieving our goals.

Welp, there go my dreams of acquiring 17 PhDs in fields I know nothing about by ChatGPT-ing my way into science. Thanks for nothing, Suzi!

Yeah that finding of "As an AI language model" phrases in scientific texts doesn't instill confidence. That's potentially another downside of using AI extensively in research: reduced public trust due to (often simply perceived) lack of rigor. In a world where a not-insignificant percentage of people already believe that vaccines cause autism, politicians are lizard people, and Bill Gates is installing 5G chips into us via Covid jabs, anything that casts doubt on the scientific method is bound to do more harm.

Trust us people to take something with as much upside potential as AI and then use it in the laziest possible way.