The brain is a computer… or so they say.

During my undergraduate studies, I often heard the mind explained as the “software” that runs on the “hardware” of the brain.

But is this true?

Increasingly, researchers are questioning whether the computer metaphor is appropriate. Many argue that it is not just wrong but harmful: it gets in the way of understanding how the brain actually works.

Yet the brain-is-a-computer metaphor remains standard in neuroscience and artificial intelligence. And if the mind really is ‘software’ that runs on the brain's neural ‘hardware’, the reasoning goes, a provocative possibility follows: if minds are like computer software, then complex computer software (like LLMs) might be like minds. Computers might (at least someday) be conscious.

When we hear talk like this, we might be inclined to point out the many ways that brains are, in fact, not like digital computers.

But while the list of differences between digital computers and brains is long, I suspect that simply listing them is unlikely to change anyone’s mind about the possibility of conscious AI.

The question we really want to ask is:

Are the differences between brains and digital computers the kind of differences that truly matter when it comes to consciousness?

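The computational functionalist (who holds that consciousness depends on the computations a system performs, not on what the system is made of) might lean towards answering no. The biological naturalist would lean towards a yes!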

Let’s find out why the biological naturalist thinks AI might never be conscious.

This week, we’re asking three questions:

Is the mind “software” that runs on the “hardware” of the brain?

Could biology be the difference that makes a difference? and,

What if we designed digital computers to function more like brains?

But first… a difference between philosophy and science

In this article, I will refer to a prominent scientific theory of consciousness. Before doing so, I want to highlight an important distinction between scientific theories of consciousness and philosophical ones.

In philosophy, much of the study of consciousness comes under the larger field called metaphysics. Metaphysics is interested in questions about the fundamental nature of reality. So philosophers working in metaphysics are interested in the broad question: what exists? When it comes to consciousness, philosophers might ask questions like: What is consciousness made of? What are the essential properties that distinguish a conscious experience from an unconscious one?

Scientists, on the other hand, tend to have a different focus. Instead of asking what consciousness is made of, scientists tend to ask questions like, How does consciousness work? or What is the function of consciousness?

This difference in approach can sometimes lead to frustration. We usually want to define what we are studying in the what-it-is-made-of sense before addressing questions about how it works.

Scientists working on consciousness often respond to this frustration in one of two ways. They either say, let’s focus on the scientific questions and leave the philosophical questions to the philosophers. Or (if they are feeling a little braver), they might suggest that scientific discoveries about the function of consciousness could provide important clues and set essential boundaries for philosophical theories.

Q1: Is the mind ‘software’ that runs on the ‘hardware’ of the brain?

A persistent idea in computer science is the separation of software and hardware.

Software is the code—the programs that run on a computer. You can think of software as the functions a computer performs. Hardware is a computer's physical components, such as its processor, memory, and hard drive.

This separation has been critical to the development of computer science. It allows software to be developed, copied, and run on many different types of hardware without much concern for how the hardware is made. It also means that developers can distribute their software worldwide and still be confident that it will run the same way and produce the same results, no matter how many copies are made. And it allows the same software to run on many machines simultaneously, which makes processing massive data sets possible.

Brains don’t work like this.

The brain's hardware (e.g. its neurons and neurotransmitters) cannot be easily separated from the functions it performs.

Every time our brain does anything (solving a complex problem, learning a new skill, or processing sensory information), it physically changes. Neurotransmitters are released into synapses, where they bind to receptors. New synapses are formed, and existing ones are pruned and reorganised. Neurons die, and (as research in recent decades suggests) new ones may be generated even in adulthood. These physical changes are thought to underlie our experiences.

Even simple physical changes in the brain can cause large changes in perceptions, thoughts, and feelings.

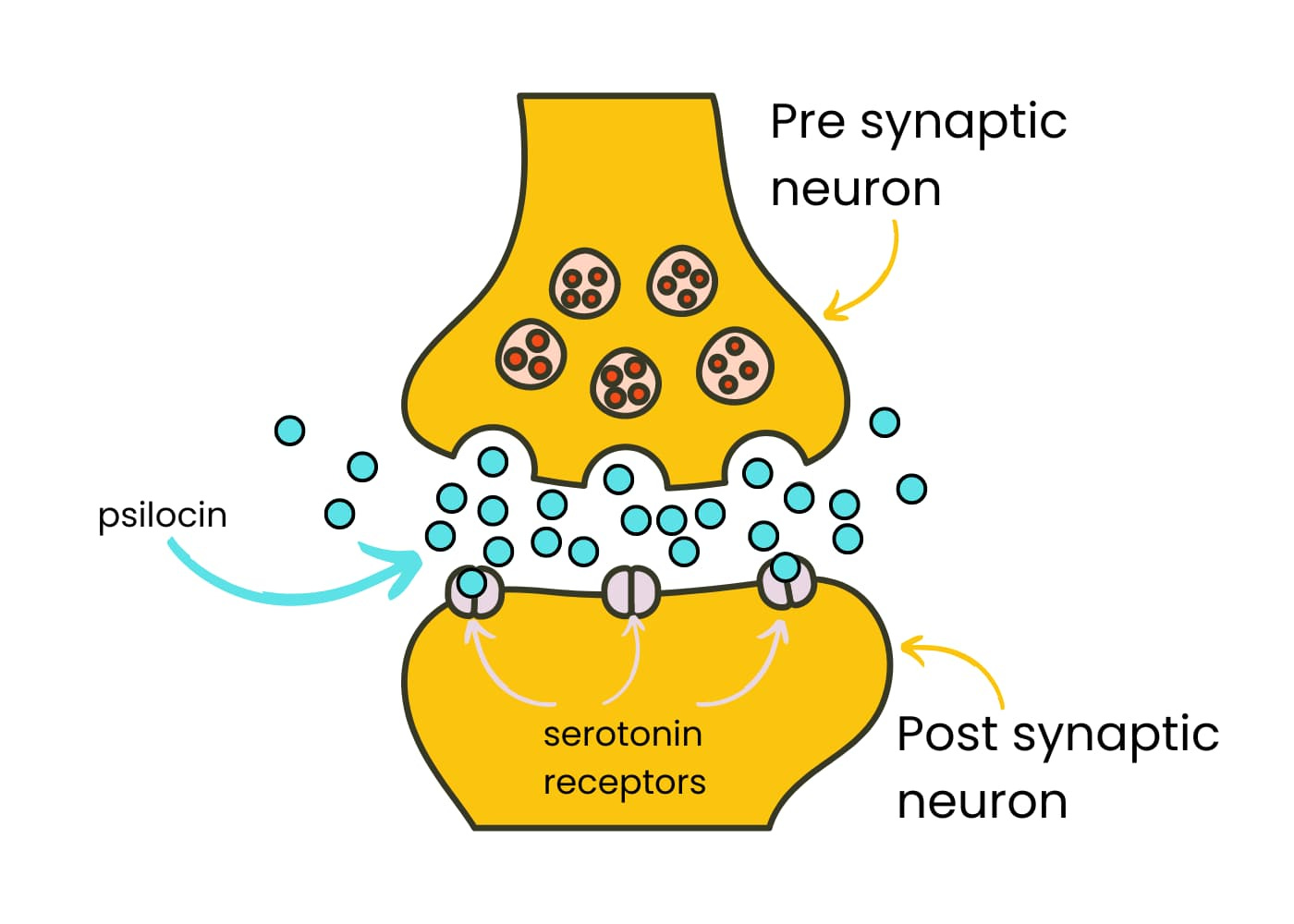

Anyone who has taken a psychedelic substance can confirm that seemingly minor physical changes can have dramatic effects on our conscious experiences.

Consider psilocybin (the active compound in magic mushrooms). Psilocybin is metabolised into psilocin, which just so happens to be structurally very similar to one of the brain's key neurotransmitters — serotonin.

To oversimplify, when psilocin reaches a synapse, it binds to serotonin receptors (especially the 5-HT2A receptor) and does much of what serotonin would normally do, but with amplified effects.

The result? Profound changes in perception, cognition, and emotional state that we recognise as a psychedelic experience. A small chemical change produces a radically different conscious experience.

Effects like the ones seen with psychedelic substances suggest that if there is a line to be drawn between the brain's hardware and software, it is a blurry one.

This leads to an important distinction between brains and computers.

In computers, because software can be copied and executed on different hardware, a computer’s software (the functions it performs) is independent of its hardware. Software does not die if the hardware fails. Software is immortal.

But this is not true for brains. If the brain’s hardware dies, its functions die, too. Unlike computers, where software can be transferred or backed up, the brain's functions are inextricably tied to its physical structure. The brain’s functions are mortal.

Could mortality be the key? Is there something about being mortal that is fundamental for consciousness?

Q2: Could biology be the difference that makes a difference?

To address this question, I'll draw on ideas presented in two key articles. The first is a recent paper by Alexander Ororbia and Karl Friston, which explores the distinction between mortal and immortal computation. The second is an article by Anil Seth, where he advances his argument that consciousness might be fundamentally dependent on biological processes.

It's important to note that both of these articles are pre-prints, which means they are early-stage research papers that have not yet undergone the rigorous peer review process. So, while the ideas presented here are intriguing, they should be considered preliminary. These concepts may evolve or change significantly as they face scrutiny from other experts and are tested against experimental evidence.

Let's begin with Ororbia and Friston's ideas. In their paper, they make a simple statement with important implications. They highlight that the key to a living system’s survival is that system’s ability to maintain a clear boundary between itself and the external world.

This will be an important concept, so let’s unpack it.

Consider a simple biological system. It is vital that this simple system maintains a boundary between itself and its surroundings.

A clear boundary between self and the outside world helps a biological system survive in a few key ways. It lets the system distinguish what it needs to protect (itself) from what lies outside, which may be dangerous threats or important resources. It allows the system to regulate what enters and what exits. And it provides a way to distinguish the system's own state from the state of the external world.

Digital computers don’t need to do this. Immortal computational systems function without concern for their own existence, so they don’t need to know where they begin and end. But because a biological system’s functions depend on the integrity of its physical ‘hardware’, the system must maintain its physical boundary to survive and keep performing those functions.

According to Anil Seth, this fundamental difference between mortal and immortal systems is critical for consciousness. He thinks it might explain why consciousness could be difficult to replicate in artificial systems.

Let’s unpack Seth’s claims.

A biological system changes — it moves in its world, requires resources, and needs maintenance. So, its internal status is in constant flux. But the biological system is not the only thing that changes. The external world changes, too. To survive, the biological system must monitor both its internal status and the status of its external world.

For example, consider a lion hunting in the savanna. It must constantly monitor its internal state—its hunger level, energy reserves, and how it is physically positioned in the world. At the same time, it needs to track changes in its environment—the movement of potential prey, the presence of competitors or threats, and shifts in weather conditions. The lion's survival depends on its ability to monitor these internal and external factors.

Seth explains that we monitor our internal and external worlds by generating predictive models. The predictive process that Seth describes aligns with a prominent theory of how the brain works. So, let’s briefly review that theory.

The Predictive Processing Theory

The Predictive Processing Theory, developed by neuroscientists like Karl Friston and popularised by philosophers such as Andy Clark, offers an explanation for how our brains process information.

The theory proposes that the brain is essentially a prediction machine. According to this view, our brains constantly generate hypotheses about the world.

Our brains aren't like passive radio receivers. We don’t simply take in whatever signals the world sends our way and broadcast them as an accurate internal representation of those signals. Instead, we are constantly making predictions about ourselves and our world, and we use the signals from our bodies and the world to update those predictions.

This can sound a bit abstract, so let's consider an example.

You might be a lover of classical music (or perhaps the bagpipes are more your thing). Whatever your go-to tune, when you listen to your favourite song, your experience is not just about the notes you hear at any precise moment. It’s also about what you have just heard and what your brain predicts you will hear in the near future.

We are constantly making predictions. These predictions are not exact. They are just approximations — our brain’s best guess — based on prior experiences and the current context. We use these best guesses to navigate our world. When we hear our favourite song, see a familiar face, or navigate a crowded room, we're experiencing our brain's best guess about the causes of the incoming signals.

If your favourite song unexpectedly stops or the melody changes, you experience what scientists call a prediction error (most of us would just call it a surprise). Any difference between what we predict and what actually happens is used as feedback to update and refine our future predictions.
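For readers who like to see ideas in code, the feedback loop just described can be sketched in a few lines. This is a toy illustration under simple assumptions, not Friston’s or Seth’s actual model: a single number stands in for the brain’s prediction (say, the pitch of the next note), and a hypothetical `learning_rate` controls how strongly each prediction error nudges the next guess (a simple delta-rule update).

```python
def update_prediction(prediction: float, observation: float,
                      learning_rate: float = 0.5) -> float:
    """Nudge a prediction toward an observation in proportion to the prediction error."""
    prediction_error = observation - prediction  # the "surprise"
    return prediction + learning_rate * prediction_error


def track(observations, initial_guess: float = 0.0, learning_rate: float = 0.5):
    """Return the prediction made just before each observation arrives."""
    predictions = []
    guess = initial_guess
    for obs in observations:
        predictions.append(guess)                               # predict first...
        guess = update_prediction(guess, obs, learning_rate)    # ...then learn from the error
    return predictions


if __name__ == "__main__":
    # A toy "melody" (pitches as numbers) that holds steady, then unexpectedly jumps.
    notes = [60, 60, 60, 60, 67, 67, 67]
    for note, guess in zip(notes, track(notes, initial_guess=60.0)):
        print(f"heard {note}, predicted {guess:.2f}, error {note - guess:+.2f}")
```

While the tune is predictable the error stays at zero; at the unexpected note it jumps (+7 here), then shrinks (+3.5, +1.75) as the prediction catches up. That shrinking error is the "update and refine" step in miniature.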

While the Predictive Processing Theory doesn't make strong claims about consciousness, some researchers, like Anil Seth, propose that consciousness is closely related to the predictive process. Seth’s ideas also align with biological naturalism, the view (most notably associated with John Searle) that consciousness is a biological phenomenon caused by processes in living brains. Building on these foundations, Seth suggests that the things we are conscious of are given to us by the brain’s best guesses. On this view, prediction might be the primary function of consciousness.

As we explored above, prediction is fundamental to the survival of any mortal system; a system that fails to predict well usually dies. So prediction and survival are closely linked in biology.

But what about computers?

The relationship between prediction and survival, which we see in biological systems, doesn't hold true for digital computers.

While computers can perform complex functions and even run sophisticated predictive models like brains do, they don't maintain themselves the way living organisms do. If a computer fails to perform its predictive functions correctly, it doesn't risk death in any meaningful sense. Because the software running the prediction model is entirely separate from the hardware it runs on, the software’s existence doesn't depend on the accuracy of its predictions or computations.

Some who are convinced by Seth’s logic might feel a sense of relief, because the difference between mortal and immortal computation would make conscious AI unlikely. Following this reasoning, if the function of consciousness in mortal systems is to generate predictions that enhance survival, then the absence of any such survival-driven need in computers is a significant barrier to artificial consciousness.

But even if Seth’s claims convince us, we may not want to breathe a sigh of relief just yet.

Q3: What if we designed digital computers to function more like brains?

Geoffrey Hinton, often dubbed the ‘godfather of AI’, recently proposed creating mortal computers.

He suggests that while the separation between software and hardware provides many benefits, it may limit our ability to create more efficient and potentially more intelligent AI systems.

He proposes the development of mortal computing in which hardware morphs as the computer learns—similar to how a brain morphs when it learns. And like a brain, the procedures the computer discovers would only work for that particular hardware, so the functions would be mortal—they would die if the hardware fails.

Hinton's main motivation for such a system is energy efficiency. Mortal computers would likely be a little less accurate but far more energy-efficient than immortal ones.
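Hinton’s point that learned procedures would only work on their own hardware can be illustrated with a deliberately tiny toy. Everything here is a hypothetical assumption for illustration, not Hinton’s design: each made-up `ToyAnalogChip` has units with fixed, slightly different gains (standing in for manufacturing quirks in analog hardware), and weights are learned by ordinary gradient descent on that chip’s own output.

```python
class ToyAnalogChip:
    """Hypothetical chip: each unit scales its signal by a fixed gain set by manufacturing quirks."""

    def __init__(self, gains):
        self.gains = list(gains)

    def run(self, weights, inputs):
        # The output depends on the learned weights AND this chip's particular gains.
        return sum(g * w * x for g, w, x in zip(self.gains, weights, inputs))


def learn_on_chip(chip, inputs, target, steps=500, lr=0.1):
    """Fit weights by gradient descent on squared error, using this chip's own behaviour."""
    weights = [0.0] * len(inputs)
    for _ in range(steps):
        error = chip.run(weights, inputs) - target
        # Gradient of 0.5 * error**2 with respect to each weight on THIS chip.
        weights = [w - lr * error * g * x
                   for w, g, x in zip(weights, chip.gains, inputs)]
    return weights


if __name__ == "__main__":
    inputs, target = [1.0, 1.0, 1.0], 1.0
    chip_a = ToyAnalogChip([1.4, 0.6, 1.0])
    chip_b = ToyAnalogChip([0.6, 1.4, 1.0])  # same design, different quirks

    weights = learn_on_chip(chip_a, inputs, target)
    print(f"chip A (trained on): {chip_a.run(weights, inputs):.3f}")
    print(f"chip B (copied to):  {chip_b.run(weights, inputs):.3f}")
```

Trained on chip A, the weights hit the target (1.000); copied onto chip B, the same weights give about 0.807. In this toy, the learned ‘software’ only really works on the hardware it was learned on, which is the sense in which such computation is mortal.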

If Anil Seth is correct and properties of life, such as mortality, are necessary for consciousness, could these mortal computational systems be conscious?

It’s important to note that Seth does not make the strong claim that properties of life, such as mortality, guarantee a system will be conscious, only that properties of life may be necessary for consciousness.

With that caveat in mind, we may still wonder whether mortal computing systems might behave in similar ways to biologically mortal organisms. Would they have similar drives to maintain a clear boundary between themselves and the outside world? Would they generate predictive models of themselves and their environment? And if they did, would this mean these systems were conscious? Or would they still lack something important?

It's worth noting that while the Predictive Processing Theory has gained significant traction in neuroscience, not everyone is convinced by its claims, and it is certainly not the only view of how the brain works.

If you would like to learn more about the possibility of mortal computers, I recommend watching the following lecture by Geoffrey Hinton:

Thank you.

I want to take a small moment to thank the lovely folks who have reached out to say hello and joined the conversation here on Substack.

If you'd like to do that, too, you can leave a comment, email me, or send me a direct message. I’d love to hear from you. If reaching out is not your thing, I completely understand. Of course, liking the article and subscribing to the newsletter also help the newsletter grow.

If you would like to support my work in more tangible ways, you can do so in two ways:

You can become a paid subscriber

or you can support my coffee addiction through the “buy me a coffee” platform.

I want to personally thank those of you who have decided to financially support my work. Your support means the world to me. It's supporters like you who make my work possible. So thank you.
